简述:

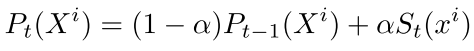

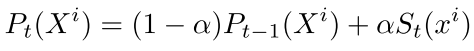

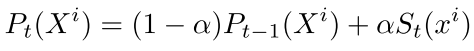

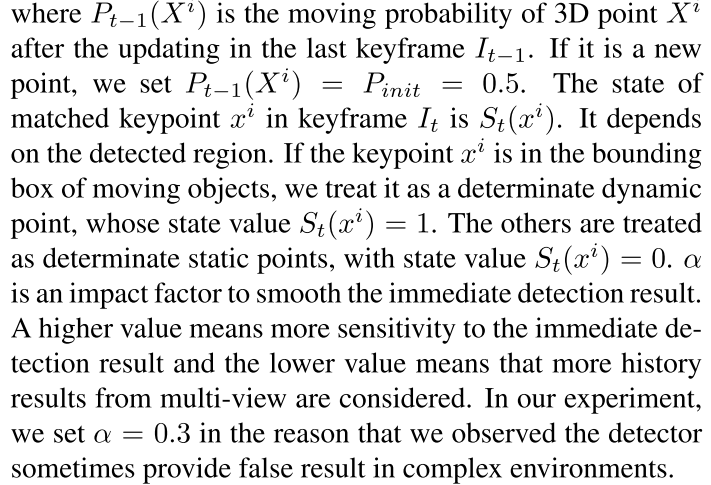

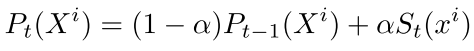

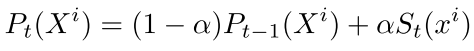

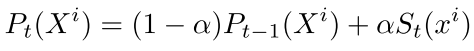

Xi: 3D point

Pt−1(Xi) : the moving probability of Xi

St(xi): the state of matched keypoint xi in keyframe $I_t$

St(xi) = 1: determinate dynamic point

St(xi) = 0: determinate static points

$\alpha$: impact factor to smooth the immediate detection result

讨论:$\alpha$如何确定的?

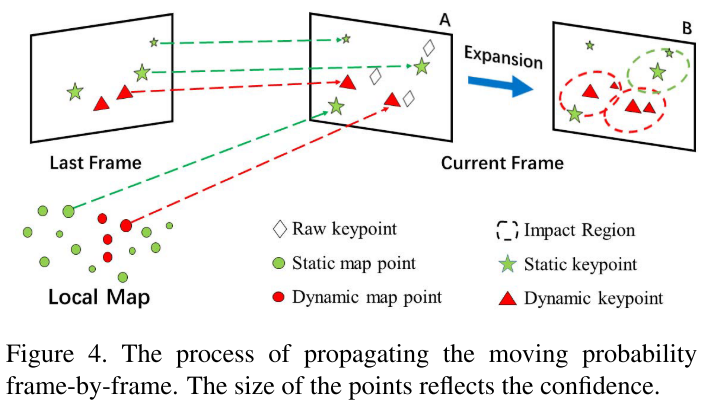

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

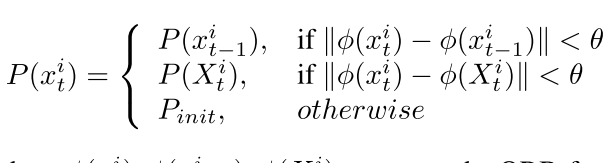

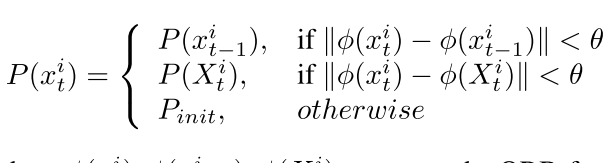

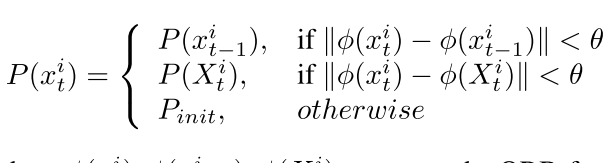

## **Feature matching**

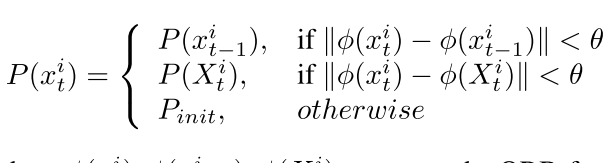

$\phi$: ORB feature 这里指的是什么,距离吗?

第一行为匹配相临帧

第二行为匹配local map

第三行,其它设为初始值

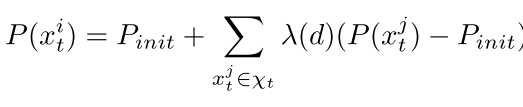

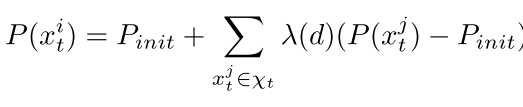

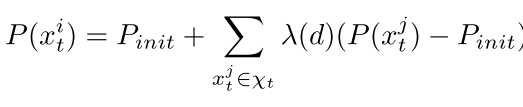

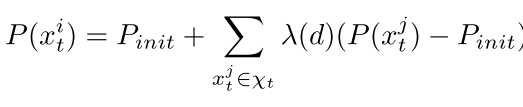

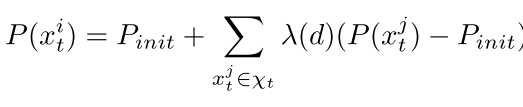

## **matching point expansion**

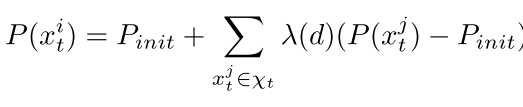

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

Pinit: the initial moving probability

λ(d): distance factor

C: constant value 如何确定的?

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of SLAM system, but most of the maps are built in pixel or low-level features without semantics. Recently, with the advancement of object detection, creating semantic map supported supported by object detector becomes more promising.

传统的地图缺少主义信息,现在很多研究通过结体目标物体检测来建立物体级别的地图。

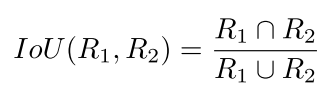

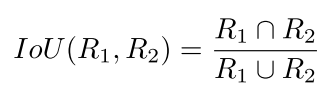

## Predicting Region ID

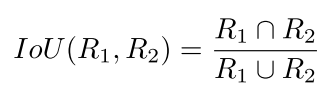

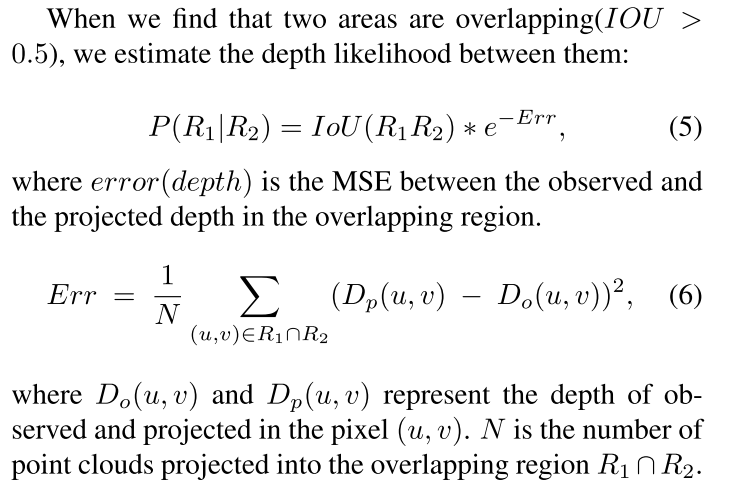

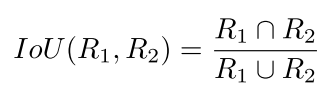

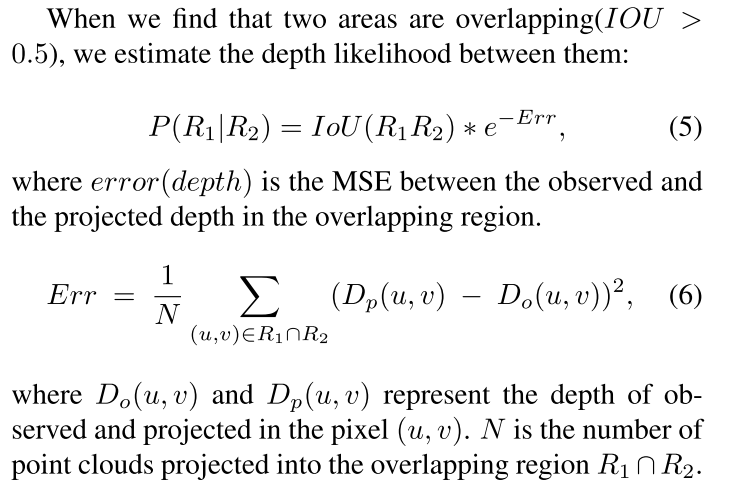

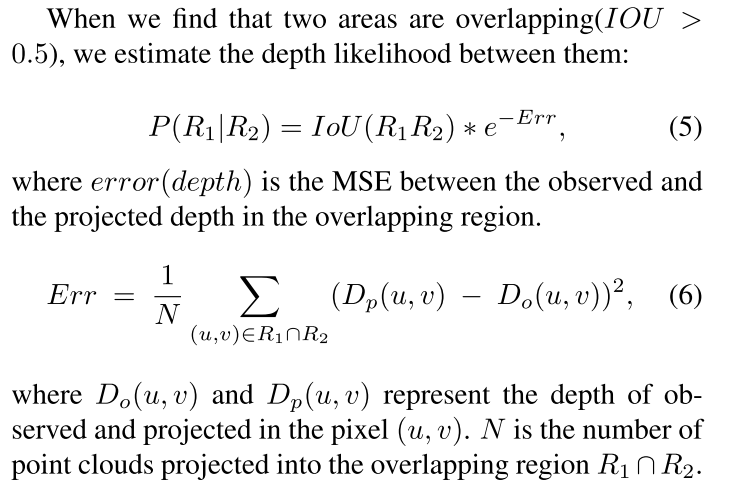

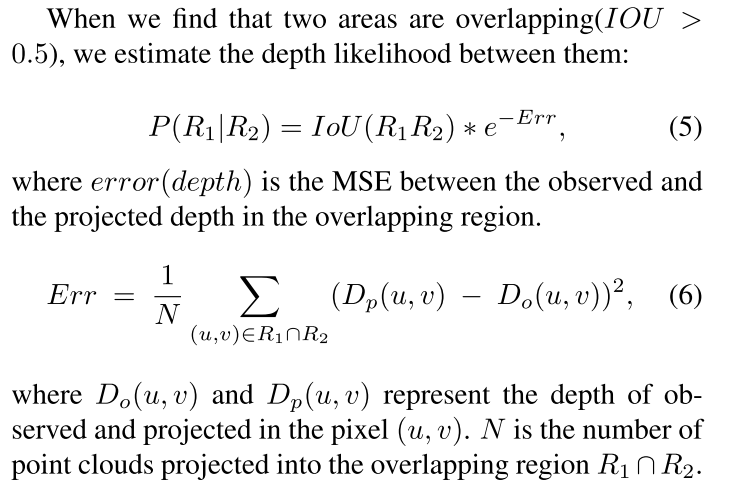

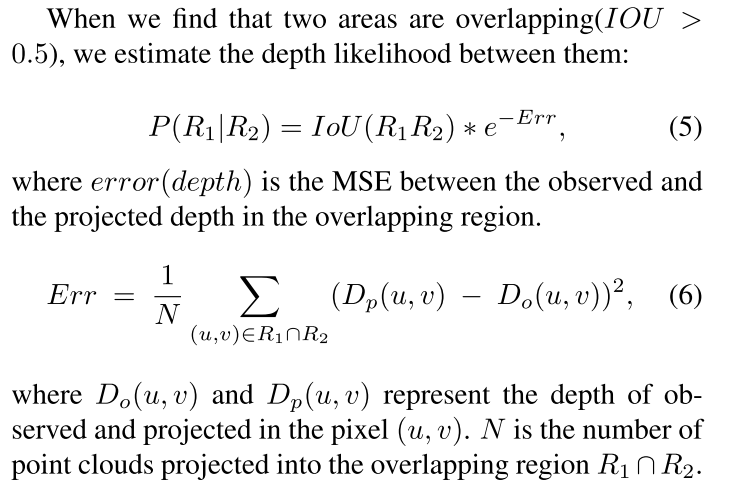

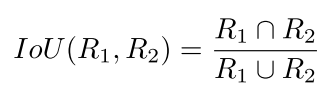

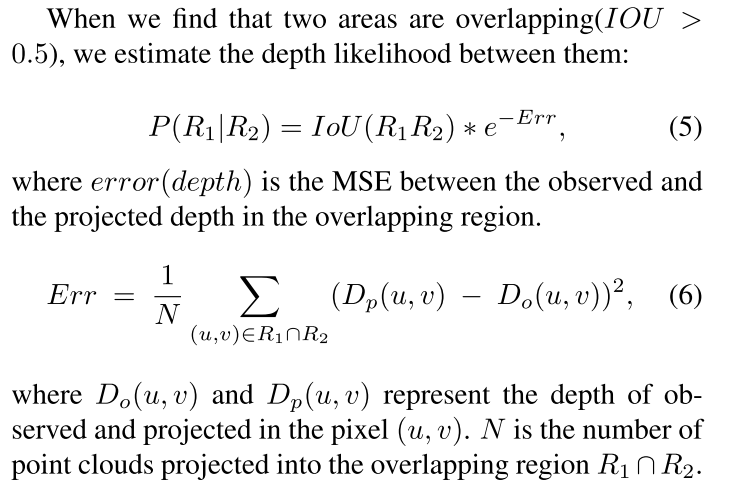

**Purpose**: to find corresponding object ID in the object map or generate a new ID if it is detected for the first timeThe object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union(IOU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1很好理解,R2是如何投影的呢?或许是对区域内的点(local map)进行了3D到2D的投影变换。

$P(R_1|R_2)$ 跟depth likelihood 有什么关系,为什么要计算depth likelihood?

这个概率是个条件概率,是什么含义?

depth likelihood 指的是什么?

## Cutting Background

They cut the background utilizing **Grab-cut algorithm**, serving points **projected area** as **seed** of foreground and points outside of the bounding box as background.

Then they get a segment mask of the target object from the bounding box, after three iterations.

## Reconstruction

Leveraging the object mask, they create object point cloud assigned with object ID and filter the noise point in 3D space. Finally, they transform the object point cloud into the world coordinate with the camera pose and insert them to the object map.

# SLAM-enhanced Detector

Semantic map can be used not only for optimizing trajectory estimation, but also improving object detector.

个人觉的这个标题容易让人误解,这里所描述的并不是真正意义上的提高object detector. 并没有对object detector算法 进行改进提高,其实跟object detector没有多少关系。

这篇论文所描述的是通过不断更新local map中的物体标签,并结合object detector得到的标签,使得Object map更具有空间连续性,更精确一些。

显然这个过程并不是提高Object detector的过程,顶多算是个浅层融合。

## Hard Example Mining

we fine-tune the original SSD network to boost detection performance in similar scenes.

这个过程是针对特殊几个物体的,虽然可以提高SLAM在极特别场景中的精确度。

但是损害了泛化性,对一般的场景就可能不适应。

如果不进行Hard Example Mining,本文的性能会如何,作者似乎没有说明。

# 讨论

1. 本文说这个算法是针对Unknown environment,但是用到的大都是比较特殊的个例,物体都是已知的, 通过这些已知先验知识来去除可能的动态物体。在未知环境是不一定有这些物体的。

2. 很多所谓的动态物体可不一定是运动的,比如实验室里的研究员,天天都是坐着不动的。

3. SLAM很重要的一个特点是针对未知环境的Unknown environment.在未知环境中去进行 Hard Example Mining是不大现实的。

4. SLAM-enhanced Detector改成SLAM-enhanced Object Map我觉的更好些,这里写得个人感觉跟Object Detector没多个关系,并不存在明显的优化提高关系。如果是说将Object Map投影到2D,然后做为Object Detector的输入参数来提高Object Detector的话,那是提高Object Detector.

5. 文章没有给出完整的Object Map

6. 实验结果有的要比原始的ORB SLAM2要差,原因何在?

7. 本文是否有在几何层级来进行运动物体检测,是否对未定义的物体进行了运动检测,文章中是虽然用了RANSAC, 但它不是运动检测。

8. 文章中用了很多参数,但基本上没说如何给定这些参数,调整这些参数对实验结果又会有多大影响?

本人并不能完全理解文章中的内容,以上仅为个人观点。

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

简述:

Xi: 3D point

Pt−1(Xi) : the moving probability of Xi

St(xi): the state of matched keypoint xi in keyframe $I_t$

St(xi) = 1: determinate dynamic point

St(xi) = 0: determinate static points

$\alpha$: impact factor to smooth the immediate detection result

讨论:$\alpha$如何确定的?

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

## **Feature matching**

$\phi$: ORB feature 这里指的是什么,距离吗?

第一行为匹配相临帧

第二行为匹配local map

第三行,其它设为初始值

## **matching point expansion**

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

Pinit: the initial moving probability

λ(d): distance factor

C: constant value 如何确定的?

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of SLAM system, but most of the maps are built in pixel or low-level features without semantics. Recently, with the advancement of object detection, creating semantic map supported supported by object detector becomes more promising.

传统的地图缺少主义信息,现在很多研究通过结体目标物体检测来建立物体级别的地图。

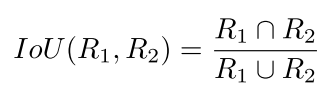

## Predicting Region ID

**Purpose**: to find corresponding object ID in the object map or generate a new ID if it is detected for the first timeThe object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union(IOU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1很好理解,R2是如何投影的呢?或许是对区域内的点(local map)进行了3D到2D的投影变换。

$P(R_1|R_2)$ 跟depth likelihood 有什么关系,为什么要计算depth likelihood?

这个概率是个条件概率,是什么含义?

depth likelihood 指的是什么?

## Cutting Background

They cut the background utilizing **Grab-cut algorithm**, serving points **projected area** as **seed** of foreground and points outside of the bounding box as background.

Then they get a segment mask of the target object from the bounding box, after three iterations.

## Reconstruction

Leveraging the object mask, they create object point cloud assigned with object ID and filter the noise point in 3D space. Finally, they transform the object point cloud into the world coordinate with the camera pose and insert them to the object map.

# SLAM-enhanced Detector

Semantic map can be used not only for optimizing trajectory estimation, but also improving object detector.

个人觉的这个标题容易让人误解,这里所描述的并不是真正意义上的提高object detector. 并没有对object detector算法 进行改进提高,其实跟object detector没有多少关系。

这篇论文所描述的是通过不断更新local map中的物体标签,并结合object detector得到的标签,使得Object map更具有空间连续性,更精确一些。

显然这个过程并不是提高Object detector的过程,顶多算是个浅层融合。

## Hard Example Mining

we fine-tune the original SSD network to boost detection performance in similar scenes.

这个过程是针对特殊几个物体的,虽然可以提高SLAM在极特别场景中的精确度。

但是损害了泛化性,对一般的场景就可能不适应。

如果不进行Hard Example Mining,本文的性能会如何,作者似乎没有说明。

# 讨论

1. 本文说这个算法是针对Unknown environment,但是用到的大都是比较特殊的个例,物体都是已知的, 通过这些已知先验知识来去除可能的动态物体。在未知环境是不一定有这些物体的。

2. 很多所谓的动态物体可不一定是运动的,比如实验室里的研究员,天天都是坐着不动的。

3. SLAM很重要的一个特点是针对未知环境的Unknown environment.在未知环境中去进行 Hard Example Mining是不大现实的。

4. SLAM-enhanced Detector改成SLAM-enhanced Object Map我觉的更好些,这里写得个人感觉跟Object Detector没多个关系,并不存在明显的优化提高关系。如果是说将Object Map投影到2D,然后做为Object Detector的输入参数来提高Object Detector的话,那是提高Object Detector.

5. 文章没有给出完整的Object Map

6. 实验结果有的要比原始的ORB SLAM2要差,原因何在?

7. 本文是否有在几何层级来进行运动物体检测,是否对未定义的物体进行了运动检测,文章中是虽然用了RANSAC, 但它不是运动检测。

8. 文章中用了很多参数,但基本上没说如何给定这些参数,调整这些参数对实验结果又会有多大影响?

本人并不能完全理解文章中的内容,以上仅为个人观点。

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial (Reading Seminar)

# Synopsis

- Study Notes of the paper: Detect-SLAM

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

# Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial

## Demo

---

- [WACV18: Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial ](https://www.youtube.com/watch?v=eqJiyU9ebaY)

## Abstract

Although significant progress has been made in SLAM and object detection in recent years, there are still a series of challenges for both tasks, e.g.,

Challenging tasks:

- SLAM in dynamic environments

- detecting objects in complex environments.

To address these challenges, we present a novel robotic vision system, which integrates **SLAM** with a deep neural network-based **object detector** to make the two functions **mutually beneficial.** The proposed system facilitates a robot to accomplish tasks reliably and efficiently in an unknown and dynamic environment.

Proposal:

SLAM + object detector

Contributions:

Experimental results show that compared to the state-of-the-art robotic vision systems, the proposed system has three advantages:

i) it greatly **improves the accuracy and robustness of SLAM** in **dynamic environments** by **removing unreliable features** from moving objects leveraging the object detector,

ii) it builds an **instance-level semantic map** of the environment in an online fashion using the synergy of the two functions for further semantic applications;

iii) it **improves the object detector** so that it can detect/recognize objects effectively under more challenging conditions such as unusual viewpoints, poor lighting condition, and motion blur, by leveraging the object map.

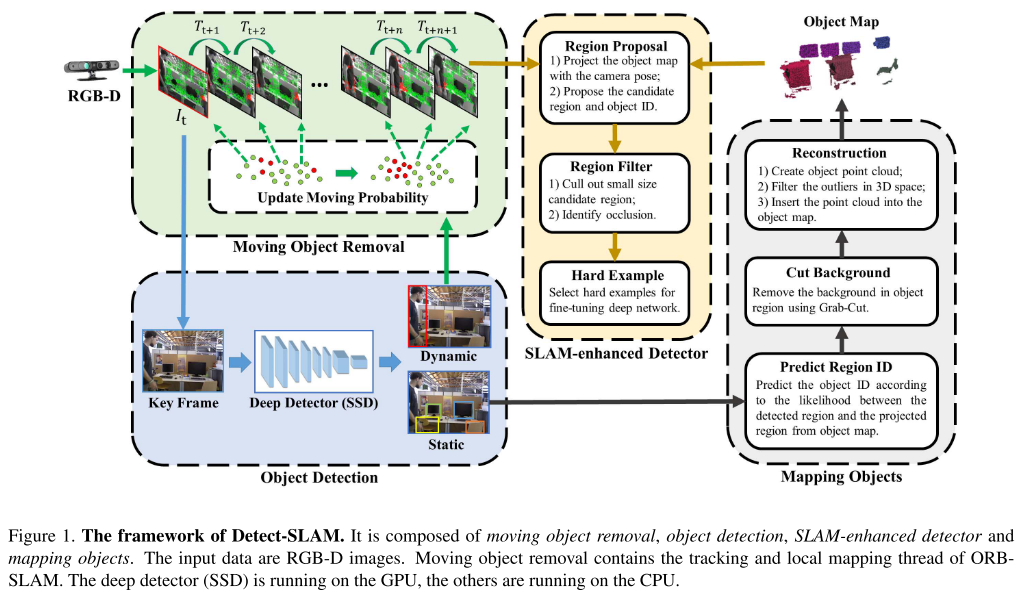

# Detect-SLAM

Detect-SLAM is built on ORB-SLAM2, which has three main parallel threads: Tracking, Local Mapping, and Loop Closing. Compared to ORB-SLAM2, the **Detect-SLAM includes three new processes**:

1. **Moving objects removal,** filtering out **features** that are associated with moving objects.

2. **Object Mapping**, reconstructing the **static objects** that are detected in the **keyframes**. The object map is composed of dense point clouds assigned with **object ID**.

3. **SLAM-enhanced Detector**, exploiting the object map as prior knowledge to improve detection performance in challenging environments.

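Main modules:

1. Remove the unstable feature points that lie on dynamic objects.

2. Build an instance-level object map from the static objects detected in keyframes.

3. Use the object map as prior knowledge, together with the SLAM pose, to improve object detection performance.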

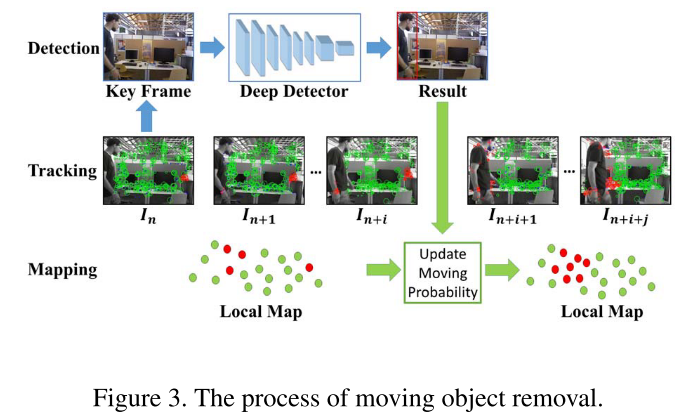

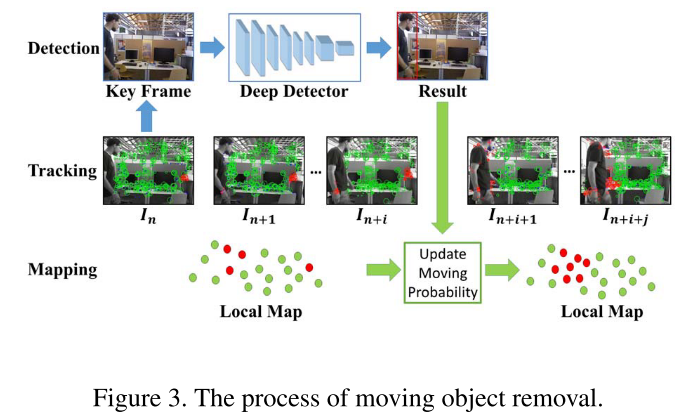

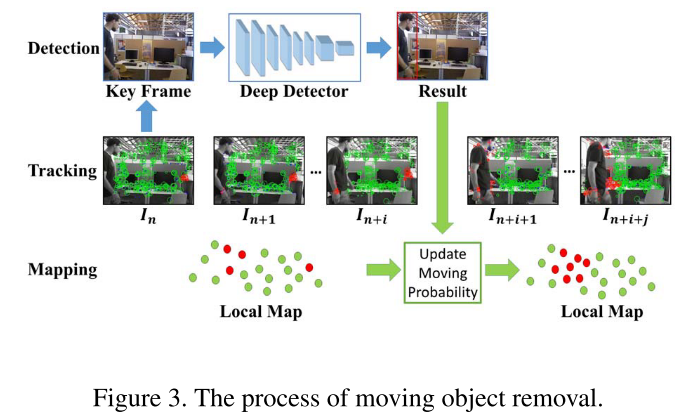

# Moving Objects Removal

> For moving object removal, we modify the Tracking and Local Mapping thread in ORB-SLAM2 to **eliminate the negative impact of moving objects**, shown in Fig. 3. Note that the moving objects in this process belong to **movable categories** which are **likely to move currently or in times to come**, such as people, dog, cat, and car. For instance, once a person is detected, no matter walking or standing, we regard it as a **potentially moving object** and remove the **features** belonging to the region in the image where the person was detected.

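To eliminate the influence of dynamic objects on SLAM, the paper assumes predefined sets of potentially dynamic and static object categories, which is prior knowledge. All feature points on objects in the dynamic set are removed.

Discussion: is this assumption reasonable, and what is the criterion for classifying an object as dynamic?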

> One key issue concerning detection-enhanced SLAM is the efficiency of object detection.

>

> The object detection process should be **fast enough** to enable **per-frame detection in real-time,** so that the unreliable regions can be removed during the per-frame tracking process of the SLAM.

>

> However, naively applying the detector in each frame is not a viable option, as even the state-of-the-art SSD method runs only at about **3 FPS** in our preliminary experiments.

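Problem: SSD is slow (about 3 FPS in the authors' preliminary experiments), so it is not suitable for per-frame detection.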

> In this section, we propose two strategies to effectively overcome this issue:

>

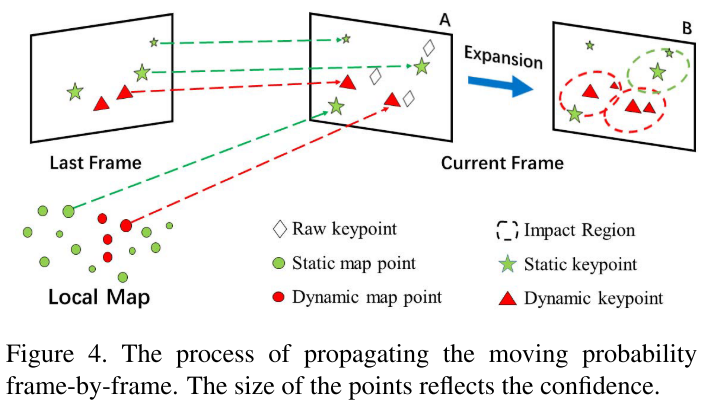

> 1) detect moving objects in **keyframes** only and then **update the moving probability of points in the local map** to accelerate the tracking thread

>

> 2) **propagate the moving probability** by **feature matching** and **matching points expansion** in the tracking thread to remove the features extracted on moving objects efficiently before camera pose estimation.

Solution:

1. Run object detection on keyframes only.

2. Update the moving probability of points in the local map.

3. Propagate the moving probability through feature matching and matching point expansion.

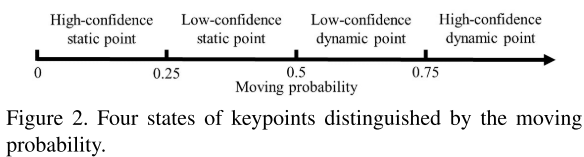

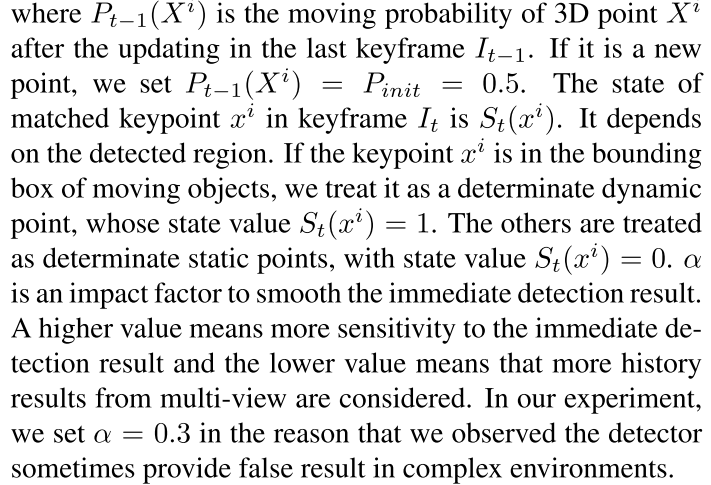

**Moving Probability**: the probability of a feature point belonging to a moving object.

They distinguish these key points into **four states** according to the moving probability.

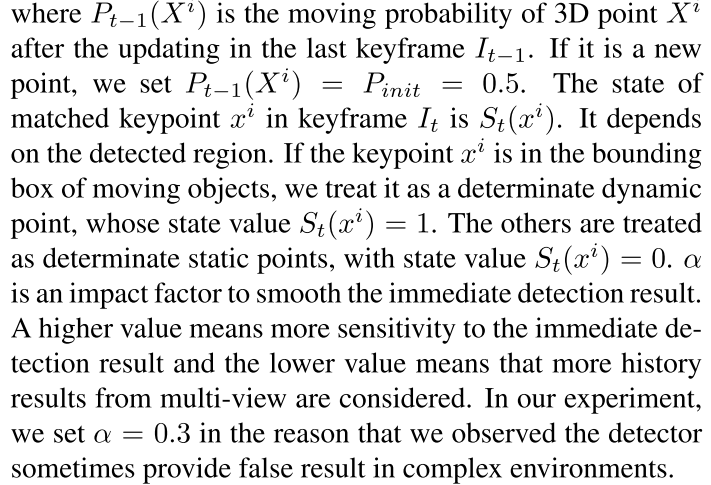

Both kinds of **high-confidence points** are used in matching point expansion to **propagate the moving probability to neighboring unmatched points.**

After every point has received a moving probability through propagation, they remove all dynamic points and use RANSAC to filter the remaining outliers before pose estimation.

Matched points can inherit the probability directly, but how is it propagated to unmatched points?

Under a spatial-consistency assumption, the high-confidence points are used to update the probability of the unmatched points.
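The four-state split and the removal of dynamic points before pose estimation can be sketched as follows. This is only an illustration of the idea in these notes: the threshold values, the state names, and the helper names `point_state` and `keep_static` are my own assumptions, not values or identifiers taken from the paper.

```python
from typing import List, Tuple

# Hypothetical thresholds on the moving probability; the notes do not
# record the values used in the paper.
HIGH_STATIC = 0.2
BOUNDARY = 0.5
HIGH_DYNAMIC = 0.8


def point_state(p: float) -> str:
    """Map a moving probability in [0, 1] to one of four (assumed) state names."""
    if p <= HIGH_STATIC:
        return "high-confidence static"
    if p < BOUNDARY:
        return "low-confidence static"
    if p < HIGH_DYNAMIC:
        return "low-confidence dynamic"
    return "high-confidence dynamic"


def keep_static(points: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Drop points considered dynamic; the survivors would go on to RANSAC."""
    return [(pid, p) for pid, p in points if p < BOUNDARY]


if __name__ == "__main__":
    pts = [("a", 0.1), ("b", 0.6), ("c", 0.45)]
    for pid, p in pts:
        print(pid, point_state(p))
    print(keep_static(pts))
```

Only the points that survive `keep_static` would be handed to the pose estimator, which matches the order described above: propagate first, remove dynamic points second, then run RANSAC.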

# Update Moving Probability

Summary:

$X_i$: 3D point

$P_{t-1}(X_i)$: the moving probability of $X_i$

$S_t(x_i)$: the state of matched keypoint $x_i$ in keyframe $I_t$

$S_t(x_i) = 1$: determinate dynamic point

$S_t(x_i) = 0$: determinate static point

$\alpha$: impact factor to smooth the immediate detection result

Discussion: how is $\alpha$ determined?

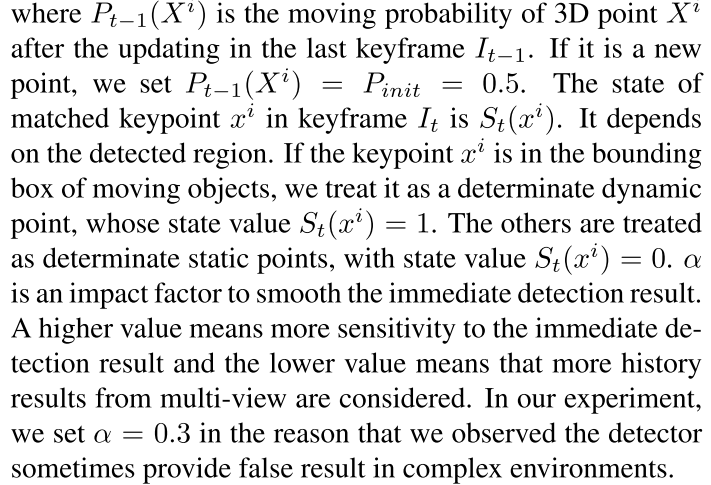

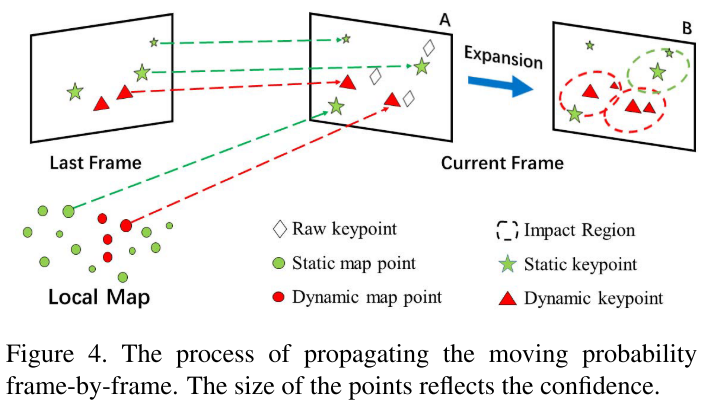

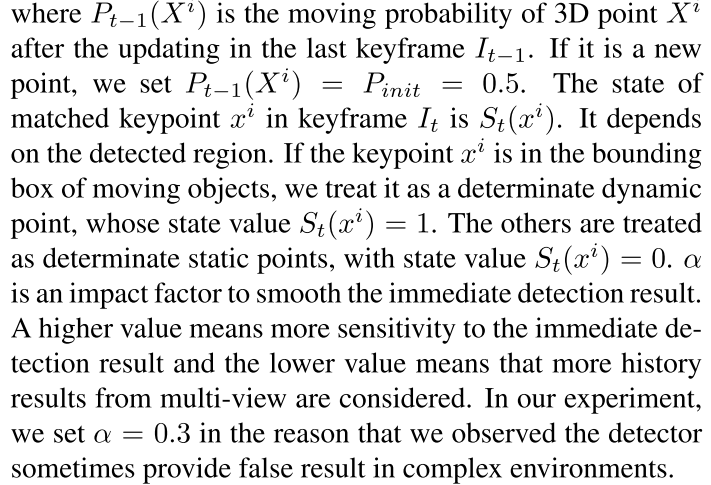

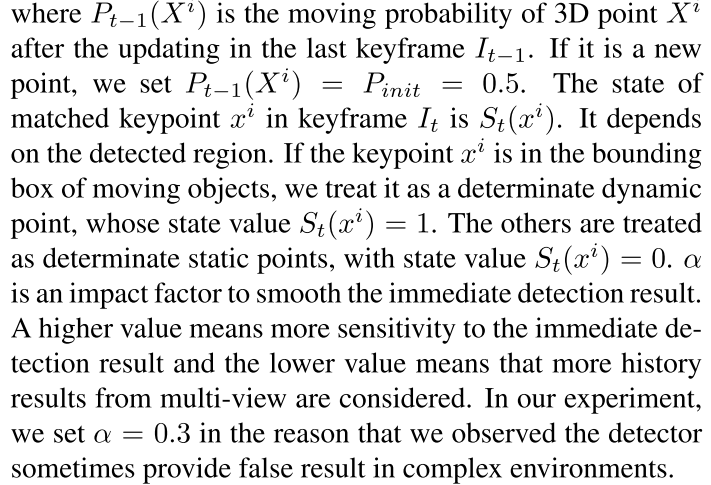
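The update rule itself is only available as a figure in these notes. Given the symbol definitions above, one reading that fits "impact factor to smooth the immediate detection result" is an exponential smoothing of the previous probability with the new detection state. The sketch below assumes that form; the function name and the default value of `alpha` are placeholders of mine.

```python
def update_moving_probability(p_prev: float, s_t: int, alpha: float = 0.3) -> float:
    """Blend the previous moving probability with the new keyframe state.

    Assumed form: P_t = (1 - alpha) * P_{t-1} + alpha * S_t,
    where S_t is 1 for a determinate dynamic point and 0 for a static one.
    The default alpha is a placeholder, not a value taken from the notes.
    """
    if s_t not in (0, 1):
        raise ValueError("s_t must be 0 (static) or 1 (dynamic)")
    return (1.0 - alpha) * p_prev + alpha * s_t


if __name__ == "__main__":
    p = 0.5
    for _ in range(3):
        # The point falls inside a detected movable object in three keyframes.
        p = update_moving_probability(p, 1)
        print(round(p, 4))
```

With this form a single detection cannot flip a point from static to dynamic; several consistent keyframes are needed, which is the smoothing effect that $\alpha$ is said to provide.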

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

## **Feature matching**

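$\phi$: ORB feature. What exactly does it denote here, a descriptor distance?

In the piecewise propagation rule, the first case covers keypoints matched against the adjacent (previous) frame.

The second case covers keypoints matched against the local map.

The third case sets all other keypoints to the initial value.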
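The three cases can be written as a small function. This is a sketch under my own assumptions: a match is represented simply by the matched point's probability (or `None` when there is no match), and `P_INIT` is a placeholder value.

```python
from typing import Optional

P_INIT = 0.5  # hypothetical initial moving probability


def propagate_by_matching(
    prev_frame_prob: Optional[float],
    map_point_prob: Optional[float],
    p_init: float = P_INIT,
) -> float:
    """Pick the moving probability for a keypoint in the current frame.

    Case 1: matched to a keypoint in the previous frame -> inherit it.
    Case 2: matched to a 3D point in the local map -> inherit it.
    Case 3: unmatched -> fall back to the initial value.
    """
    if prev_frame_prob is not None:
        return prev_frame_prob
    if map_point_prob is not None:
        return map_point_prob
    return p_init


if __name__ == "__main__":
    print(propagate_by_matching(0.9, 0.2))    # case 1
    print(propagate_by_matching(None, 0.2))   # case 2
    print(propagate_by_matching(None, None))  # case 3
```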

## **Matching point expansion**

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

$P_{init}$: the initial moving probability

$\lambda(d)$: distance factor

$C$: constant value. How is it determined?
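To make the "sum all the impact" step concrete, here is a minimal sketch. The exact form of $\lambda(d)$ is not recorded in these notes, so the exponential decay with a cut-off radius, the constant `c`, the signed impact `(p - p_init)`, and the final clamping to [0, 1] are all assumptions of mine.

```python
import math
from typing import List, Tuple


def distance_factor(d: float, c: float = 0.5, radius: float = 20.0) -> float:
    """Hypothetical lambda(d): decays with pixel distance, zero beyond radius."""
    if d > radius:
        return 0.0
    return c * math.exp(-d / radius)


def expand_probability(
    point_xy: Tuple[float, float],
    high_conf_neighbors: List[Tuple[float, float, float]],
    p_init: float = 0.5,
) -> float:
    """Sum the impact of neighboring high-confidence points on one unmatched point.

    Each neighbor is (x, y, moving_probability). Dynamic neighbors push the
    result above p_init, static neighbors pull it below.
    """
    total = p_init
    for nx, ny, p in high_conf_neighbors:
        d = math.hypot(point_xy[0] - nx, point_xy[1] - ny)
        total += distance_factor(d) * (p - p_init)
    return min(1.0, max(0.0, total))


if __name__ == "__main__":
    print(expand_probability((0.0, 0.0), [(0.0, 0.0, 1.0)]))
    print(expand_probability((0.0, 0.0), [(100.0, 0.0, 1.0)]))
```

A neighbor outside the radius contributes nothing, so an isolated unmatched point simply keeps $P_{init}$.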

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of a SLAM system, but most maps are built from pixels or low-level features without semantics. Recently, with the advancement of object detection, creating a semantic map supported by an object detector has become more promising.

Traditional maps lack semantic information; much current research builds object-level maps by incorporating object detection.

## Predicting Region ID

**Purpose**: to find the corresponding object ID in the object map, or to generate a new ID if the object is detected for the first time.

The object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union (IoU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1 is easy to understand, but how is R2 projected? Perhaps the points in the region (from the local map) are projected from 3D to 2D.

How is $P(R_1|R_2)$ related to the depth likelihood, and why compute a depth likelihood at all?

This is a conditional probability; what does it mean?

What does depth likelihood refer to?
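The IoU part of the ID prediction is standard and can be sketched directly. I assume here that both R1 and R2 are axis-aligned boxes `(x1, y1, x2, y2)`; the notes do not say what shape the projected region has. The acceptance threshold and the helper `predict_region_id` are also hypothetical, and the depth likelihood term is left out because its definition is unclear to me.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def predict_region_id(
    detection: Box, projections: Dict[int, Box], next_id: int, threshold: float = 0.5
) -> int:
    """Return the ID of the best-overlapping projected object, else a new ID."""
    best_id, best_score = None, 0.0
    for object_id, box in projections.items():
        score = iou(detection, box)
        if score > best_score:
            best_id, best_score = object_id, score
    if best_id is not None and best_score >= threshold:
        return best_id
    return next_id


if __name__ == "__main__":
    projected = {1: (0, 0, 2, 2), 2: (10, 10, 12, 12)}
    print(predict_region_id((0, 0, 2, 2), projected, next_id=3))
    print(predict_region_id((5, 5, 6, 6), projected, next_id=3))
```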

## Cutting Background

They cut the background using the **GrabCut algorithm**, taking the **projected area** of the object-map points as the **seed** of the foreground and the points outside the bounding box as background.

They then obtain a segmentation mask of the target object from the bounding box after three iterations.
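The seeding described above can be illustrated by building the initial label mask. The sketch uses plain Python lists so that it runs without dependencies; the four label values mirror OpenCV's `GC_BGD`, `GC_FGD`, `GC_PR_BGD` and `GC_PR_FGD` constants. Marking the rest of the bounding box as "probable foreground" is my assumption, as is the helper name `build_seed_mask`. Converted to a `uint8` NumPy array, such a mask could be passed to `cv2.grabCut` with mode `cv2.GC_INIT_WITH_MASK` and an iteration count of 3.

```python
from typing import List, Sequence, Tuple

# Same numeric values as OpenCV's GrabCut label constants.
GC_BGD, GC_FGD, GC_PR_BGD, GC_PR_FGD = 0, 1, 2, 3


def build_seed_mask(
    height: int,
    width: int,
    bbox: Tuple[int, int, int, int],
    projected_pixels: Sequence[Tuple[int, int]],
) -> List[List[int]]:
    """Build a GrabCut-style label mask.

    bbox is (x1, y1, x2, y2) with x2 and y2 exclusive.
    Outside the box: definite background.
    Inside the box: probable foreground (assumption).
    Projected object-map points inside the box: definite foreground seeds.
    """
    x1, y1, x2, y2 = bbox
    mask = [[GC_BGD] * width for _ in range(height)]
    for y in range(max(0, y1), min(height, y2)):
        for x in range(max(0, x1), min(width, x2)):
            mask[y][x] = GC_PR_FGD
    for x, y in projected_pixels:
        if max(0, x1) <= x < min(width, x2) and max(0, y1) <= y < min(height, y2):
            mask[y][x] = GC_FGD
    return mask


if __name__ == "__main__":
    for row in build_seed_mask(4, 4, (1, 1, 3, 3), [(1, 1), (0, 0)]):
        print(row)
```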

## Reconstruction

Leveraging the object mask, they create an object point cloud tagged with the object ID and filter out noise points in 3D space. Finally, they transform the object point cloud into world coordinates using the camera pose and insert it into the object map.
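The last step, moving the object points into world coordinates and storing them under their object ID, might look like the sketch below. I assume the camera pose is given as a camera-to-world rotation matrix `R` and translation `t`, so that a world point is `R * p + t`; the dictionary-based object map and both function names are illustrative only.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def camera_to_world(
    points: Sequence[Point],
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
) -> List[Point]:
    """Apply p_world = R * p_cam + t to every point (R is 3x3, t has length 3)."""
    world = []
    for p in points:
        world.append(
            tuple(
                sum(rotation[r][c] * p[c] for c in range(3)) + translation[r]
                for r in range(3)
            )
        )
    return world


def insert_into_object_map(
    object_map: Dict[int, List[Point]], object_id: int, world_points: List[Point]
) -> None:
    """Append the points to the cloud stored under object_id."""
    object_map.setdefault(object_id, []).extend(world_points)


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    cloud = camera_to_world([(0, 0, 1)], identity, (1, 2, 3))
    object_map: Dict[int, List[Point]] = {}
    insert_into_object_map(object_map, 7, cloud)
    print(object_map)
```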

# SLAM-enhanced Detector

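A semantic map can be used not only to optimize trajectory estimation, but also to improve the object detector.

In my opinion this title is misleading: what is described here does not improve the object detector in any real sense. The object detection algorithm itself is not modified or improved, and the process has little to do with the object detector.

What the paper describes is continuously updating the object labels in the local map and combining them with the labels from the object detector, so that the object map becomes more spatially consistent and more accurate.

Clearly this process does not improve the object detector; at most it is a shallow fusion.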

## Hard Example Mining

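We fine-tune the original SSD network to boost detection performance in similar scenes.

This process targets a few specific objects; it may improve SLAM accuracy in very particular scenes.

However, it hurts generalization and may not carry over to general scenes.

The authors do not seem to report how the system performs without Hard Example Mining.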

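# Discussion

1. The paper says the algorithm targets unknown environments, but it mostly relies on fairly special cases in which the objects are known; this prior knowledge is used to remove potentially dynamic objects. An unknown environment does not necessarily contain these objects.

2. Many so-called dynamic objects are not necessarily moving, for example researchers in a lab who sit still all day.

3. An important characteristic of SLAM is that it targets unknown environments. Performing Hard Example Mining in an unknown environment is not very realistic.

4. I think "SLAM-enhanced Object Map" would be a better name than "SLAM-enhanced Detector". As written, it seems to me to have little to do with the object detector, and there is no clear improvement of it. If the object map were projected to 2D and fed to the object detector as an input to improve detection, that would be improving the object detector.

5. The paper does not show a complete object map.

6. Some experimental results are worse than those of the original ORB-SLAM2. Why?

7. Does the paper detect moving objects at the geometric level, and does it detect motion for undefined objects? The paper does use RANSAC, but that is not motion detection.

8. The paper uses many parameters but says little about how they are set. How much would tuning them affect the experimental results?

I do not fully understand everything in the paper; the above is only my personal opinion.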

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

简述:

Xi: 3D point

Pt−1(Xi) : the moving probability of Xi

St(xi): the state of matched keypoint xi in keyframe $I_t$

St(xi) = 1: determinate dynamic point

St(xi) = 0: determinate static points

$\alpha$: impact factor to smooth the immediate detection result

讨论:$\alpha$如何确定的?

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

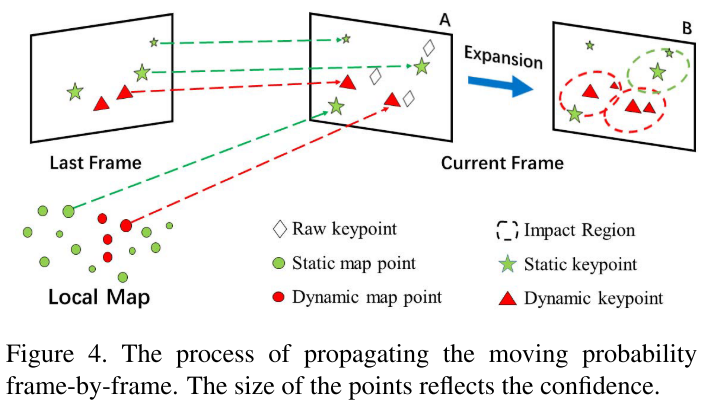

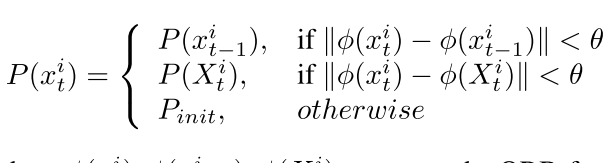

## **Feature matching**

$\phi$: ORB feature 这里指的是什么,距离吗?

第一行为匹配相临帧

第二行为匹配local map

第三行,其它设为初始值

## **matching point expansion**

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

Pinit: the initial moving probability

λ(d): distance factor

C: constant value 如何确定的?

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of SLAM system, but most of the maps are built in pixel or low-level features without semantics. Recently, with the advancement of object detection, creating semantic map supported supported by object detector becomes more promising.

传统的地图缺少主义信息,现在很多研究通过结体目标物体检测来建立物体级别的地图。

## Predicting Region ID

**Purpose**: to find corresponding object ID in the object map or generate a new ID if it is detected for the first timeThe object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union(IOU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1很好理解,R2是如何投影的呢?或许是对区域内的点(local map)进行了3D到2D的投影变换。

$P(R_1|R_2)$ 跟depth likelihood 有什么关系,为什么要计算depth likelihood?

这个概率是个条件概率,是什么含义?

depth likelihood 指的是什么?

## Cutting Background

They cut the background utilizing **Grab-cut algorithm**, serving points **projected area** as **seed** of foreground and points outside of the bounding box as background.

Then they get a segment mask of the target object from the bounding box, after three iterations.

## Reconstruction

Leveraging the object mask, they create object point cloud assigned with object ID and filter the noise point in 3D space. Finally, they transform the object point cloud into the world coordinate with the camera pose and insert them to the object map.

# SLAM-enhanced Detector

Semantic map can be used not only for optimizing trajectory estimation, but also improving object detector.

个人觉的这个标题容易让人误解,这里所描述的并不是真正意义上的提高object detector. 并没有对object detector算法 进行改进提高,其实跟object detector没有多少关系。

这篇论文所描述的是通过不断更新local map中的物体标签,并结合object detector得到的标签,使得Object map更具有空间连续性,更精确一些。

显然这个过程并不是提高Object detector的过程,顶多算是个浅层融合。

## Hard Example Mining

we fine-tune the original SSD network to boost detection performance in similar scenes.

这个过程是针对特殊几个物体的,虽然可以提高SLAM在极特别场景中的精确度。

但是损害了泛化性,对一般的场景就可能不适应。

如果不进行Hard Example Mining,本文的性能会如何,作者似乎没有说明。

# 讨论

1. 本文说这个算法是针对Unknown environment,但是用到的大都是比较特殊的个例,物体都是已知的, 通过这些已知先验知识来去除可能的动态物体。在未知环境是不一定有这些物体的。

2. 很多所谓的动态物体可不一定是运动的,比如实验室里的研究员,天天都是坐着不动的。

3. SLAM很重要的一个特点是针对未知环境的Unknown environment.在未知环境中去进行 Hard Example Mining是不大现实的。

4. SLAM-enhanced Detector改成SLAM-enhanced Object Map我觉的更好些,这里写得个人感觉跟Object Detector没多个关系,并不存在明显的优化提高关系。如果是说将Object Map投影到2D,然后做为Object Detector的输入参数来提高Object Detector的话,那是提高Object Detector.

5. 文章没有给出完整的Object Map

6. 实验结果有的要比原始的ORB SLAM2要差,原因何在?

7. 本文是否有在几何层级来进行运动物体检测,是否对未定义的物体进行了运动检测,文章中是虽然用了RANSAC, 但它不是运动检测。

8. 文章中用了很多参数,但基本上没说如何给定这些参数,调整这些参数对实验结果又会有多大影响?

本人并不能完全理解文章中的内容,以上仅为个人观点。

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

简述:

Xi: 3D point

Pt−1(Xi) : the moving probability of Xi

St(xi): the state of matched keypoint xi in keyframe $I_t$

St(xi) = 1: determinate dynamic point

St(xi) = 0: determinate static points

$\alpha$: impact factor to smooth the immediate detection result

讨论:$\alpha$如何确定的?

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

## **Feature matching**

$\phi$: ORB feature 这里指的是什么,距离吗?

第一行为匹配相临帧

第二行为匹配local map

第三行,其它设为初始值

## **matching point expansion**

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

Pinit: the initial moving probability

λ(d): distance factor

C: constant value 如何确定的?

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of SLAM system, but most of the maps are built in pixel or low-level features without semantics. Recently, with the advancement of object detection, creating semantic map supported supported by object detector becomes more promising.

传统的地图缺少主义信息,现在很多研究通过结体目标物体检测来建立物体级别的地图。

## Predicting Region ID

**Purpose**: to find corresponding object ID in the object map or generate a new ID if it is detected for the first timeThe object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union(IOU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1很好理解,R2是如何投影的呢?或许是对区域内的点(local map)进行了3D到2D的投影变换。

$P(R_1|R_2)$ 跟depth likelihood 有什么关系,为什么要计算depth likelihood?

这个概率是个条件概率,是什么含义?

depth likelihood 指的是什么?

## Cutting Background

They cut the background utilizing **Grab-cut algorithm**, serving points **projected area** as **seed** of foreground and points outside of the bounding box as background.

Then they get a segment mask of the target object from the bounding box, after three iterations.

## Reconstruction

Leveraging the object mask, they create object point cloud assigned with object ID and filter the noise point in 3D space. Finally, they transform the object point cloud into the world coordinate with the camera pose and insert them to the object map.

# SLAM-enhanced Detector

Semantic map can be used not only for optimizing trajectory estimation, but also improving object detector.

个人觉的这个标题容易让人误解,这里所描述的并不是真正意义上的提高object detector. 并没有对object detector算法 进行改进提高,其实跟object detector没有多少关系。

这篇论文所描述的是通过不断更新local map中的物体标签,并结合object detector得到的标签,使得Object map更具有空间连续性,更精确一些。

显然这个过程并不是提高Object detector的过程,顶多算是个浅层融合。

## Hard Example Mining

we fine-tune the original SSD network to boost detection performance in similar scenes.

这个过程是针对特殊几个物体的,虽然可以提高SLAM在极特别场景中的精确度。

但是损害了泛化性,对一般的场景就可能不适应。

如果不进行Hard Example Mining,本文的性能会如何,作者似乎没有说明。

# 讨论

1. 本文说这个算法是针对Unknown environment,但是用到的大都是比较特殊的个例,物体都是已知的, 通过这些已知先验知识来去除可能的动态物体。在未知环境是不一定有这些物体的。

2. 很多所谓的动态物体可不一定是运动的,比如实验室里的研究员,天天都是坐着不动的。

3. SLAM很重要的一个特点是针对未知环境的Unknown environment.在未知环境中去进行 Hard Example Mining是不大现实的。

4. SLAM-enhanced Detector改成SLAM-enhanced Object Map我觉的更好些,这里写得个人感觉跟Object Detector没多个关系,并不存在明显的优化提高关系。如果是说将Object Map投影到2D,然后做为Object Detector的输入参数来提高Object Detector的话,那是提高Object Detector.

5. 文章没有给出完整的Object Map

6. 实验结果有的要比原始的ORB SLAM2要差,原因何在?

7. 本文是否有在几何层级来进行运动物体检测,是否对未定义的物体进行了运动检测,文章中是虽然用了RANSAC, 但它不是运动检测。

8. 文章中用了很多参数,但基本上没说如何给定这些参数,调整这些参数对实验结果又会有多大影响?

本人并不能完全理解文章中的内容,以上仅为个人观点。

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

简述:

Xi: 3D point

Pt−1(Xi) : the moving probability of Xi

St(xi): the state of matched keypoint xi in keyframe $I_t$

St(xi) = 1: determinate dynamic point

St(xi) = 0: determinate static points

$\alpha$: impact factor to smooth the immediate detection result

讨论:$\alpha$如何确定的?

# Moving Probability Propagation

In the tracking thread, they estimate the moving probability of every key point frame by frame via two operations:

1) feature matching

2) matching point expansion

## **Feature matching**

$\phi$: ORB feature 这里指的是什么,距离吗?

第一行为匹配相临帧

第二行为匹配local map

第三行,其它设为初始值

## **matching point expansion**

If a point is impacted by more than one high-confidence point, we will sum all the impact of these neighboring high-confidence points.

Pinit: the initial moving probability

λ(d): distance factor

C: constant value 如何确定的?

# Mapping Objects Reconstructing

Reconstructing the environment in a map is the core ability of SLAM system, but most of the maps are built in pixel or low-level features without semantics. Recently, with the advancement of object detection, creating semantic map supported supported by object detector becomes more promising.

传统的地图缺少主义信息,现在很多研究通过结体目标物体检测来建立物体级别的地图。

## Predicting Region ID

**Purpose**: to find corresponding object ID in the object map or generate a new ID if it is detected for the first timeThe object ID prediction is based on the **geometry assumption** that the **area of projection and detection should overlap** if both areas belong to the same object. So we compute the intersection over union(IOU) between two areas to represent the level of overlapping:

R1 is the area from detection

R2 is the area from the object map projection

R1很好理解,R2是如何投影的呢?或许是对区域内的点(local map)进行了3D到2D的投影变换。

$P(R_1|R_2)$ 跟depth likelihood 有什么关系,为什么要计算depth likelihood?

这个概率是个条件概率,是什么含义?

depth likelihood 指的是什么?

## Cutting Background

They cut the background utilizing **Grab-cut algorithm**, serving points **projected area** as **seed** of foreground and points outside of the bounding box as background.

Then they get a segment mask of the target object from the bounding box, after three iterations.

## Reconstruction

Leveraging the object mask, they create object point cloud assigned with object ID and filter the noise point in 3D space. Finally, they transform the object point cloud into the world coordinate with the camera pose and insert them to the object map.

# SLAM-enhanced Detector

Semantic map can be used not only for optimizing trajectory estimation, but also improving object detector.

个人觉的这个标题容易让人误解,这里所描述的并不是真正意义上的提高object detector. 并没有对object detector算法 进行改进提高,其实跟object detector没有多少关系。

这篇论文所描述的是通过不断更新local map中的物体标签,并结合object detector得到的标签,使得Object map更具有空间连续性,更精确一些。

显然这个过程并不是提高Object detector的过程,顶多算是个浅层融合。

## Hard Example Mining

we fine-tune the original SSD network to boost detection performance in similar scenes.

这个过程是针对特殊几个物体的,虽然可以提高SLAM在极特别场景中的精确度。

但是损害了泛化性,对一般的场景就可能不适应。

如果不进行Hard Example Mining,本文的性能会如何,作者似乎没有说明。

# 讨论

1. 本文说这个算法是针对Unknown environment,但是用到的大都是比较特殊的个例,物体都是已知的, 通过这些已知先验知识来去除可能的动态物体。在未知环境是不一定有这些物体的。

2. 很多所谓的动态物体可不一定是运动的,比如实验室里的研究员,天天都是坐着不动的。

3. SLAM很重要的一个特点是针对未知环境的Unknown environment.在未知环境中去进行 Hard Example Mining是不大现实的。

4. SLAM-enhanced Detector改成SLAM-enhanced Object Map我觉的更好些,这里写得个人感觉跟Object Detector没多个关系,并不存在明显的优化提高关系。如果是说将Object Map投影到2D,然后做为Object Detector的输入参数来提高Object Detector的话,那是提高Object Detector.

5. 文章没有给出完整的Object Map

6. 实验结果有的要比原始的ORB SLAM2要差,原因何在?

7. 本文是否有在几何层级来进行运动物体检测,是否对未定义的物体进行了运动检测,文章中是虽然用了RANSAC, 但它不是运动检测。

8. 文章中用了很多参数,但基本上没说如何给定这些参数,调整这些参数对实验结果又会有多大影响?

本人并不能完全理解文章中的内容,以上仅为个人观点。

# References

Zhong, F., Wang, S., Zhang, Z., Chen, C., & Wang, Y. (2018). Detect-SLAM: Making Object Detection and SLAM Mutually Beneficial. *Proceedings - 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018*, *2018*-*Janua*, 1001–1010. https://doi.org/10.1109/WACV.2018.00115

No comments