## 重点内容

**物体分割**: BlizNet

缺点是不能完美的分割物体,原因是可能物体太小或太远,或只出现了物体的一部分

**Dynamic Object Mask Extension**:

框中的部分是靠物体分割无法解决的部分,这部分会在PCD图上形成鬼影。

对于这部分区城通过分析 深度图像 来修补物体分割MASK的殘缺。

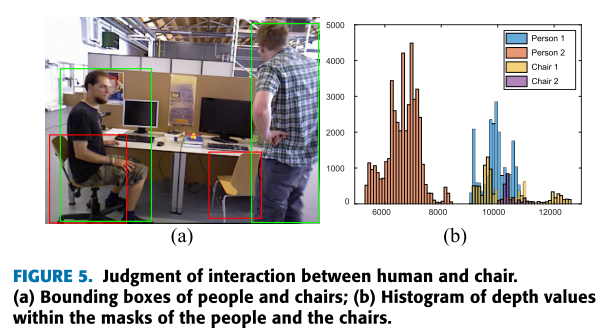

## Interaction Judgement between potential dynamic object and dynamic object

()

1) 检测区域是否相交, 确定候选的相互交互的物体 $\{P(i), O(j)\}$

2)进一步检测他们的深度分布是否有重合部分

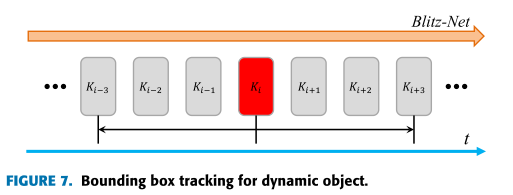

## BOUNDING BOX TRACKING:

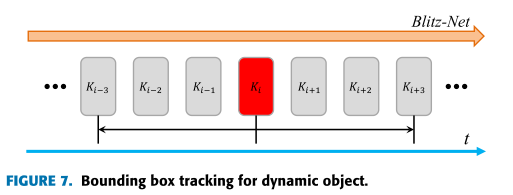

Blitz-Net 不能有效的检测较小,较远,或只出现物体一部分的目标物体。因此通过综合前后3帧的Bounding box来预测/平滑当前帧的Bounding box。 $K_t$ 是当前帧。

问题:未来的帧还没到,是如何利用的?

后面内容:

- GEOMETRIC SEGMENTATION OF DEPTH IMAGE

- FEATURE POINTS WITH STABLE DEPTH VALUES

去除没有深度的点,去除深度变化较大的点,保留深度值较稳定的点。

**LOCATION OF STATIC MATCHING POINTS**:

利用对极约束去除外点。

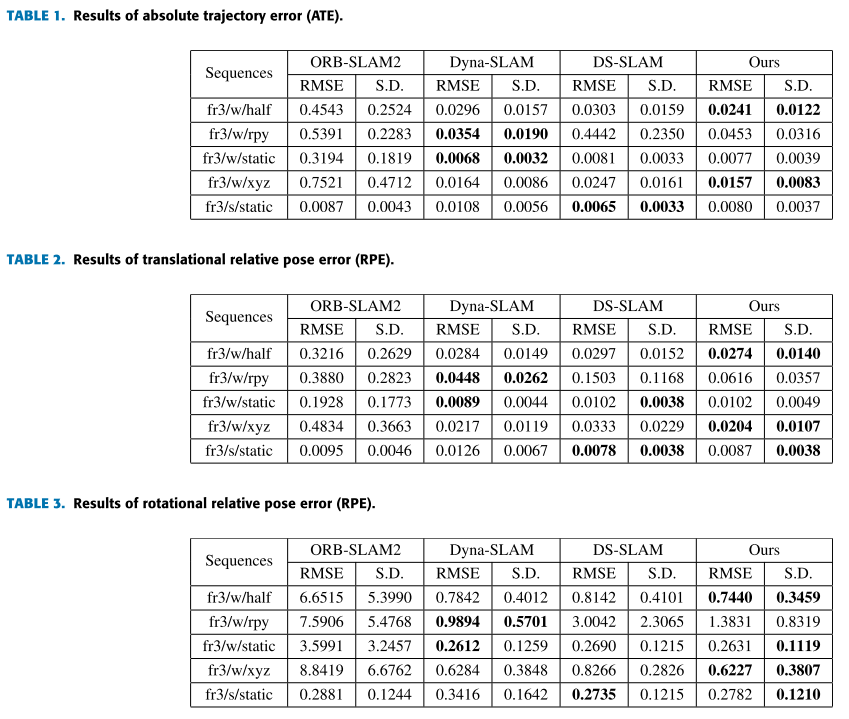

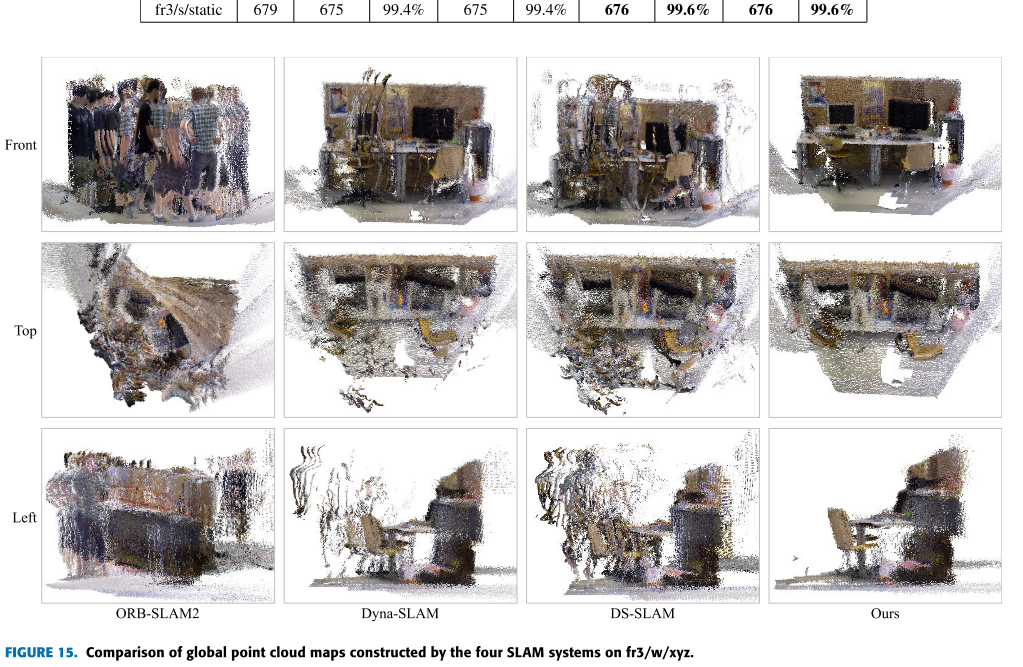

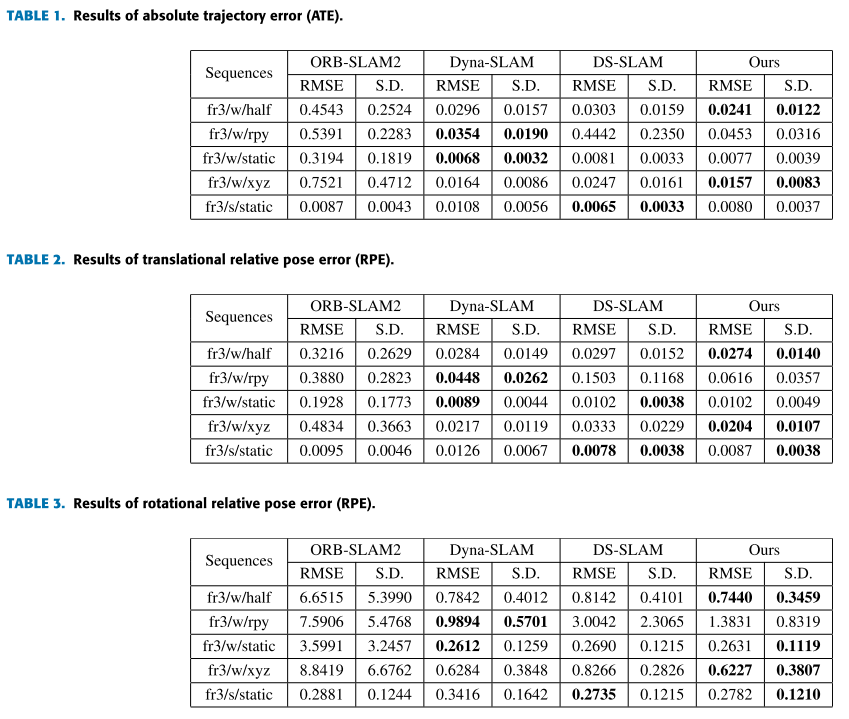

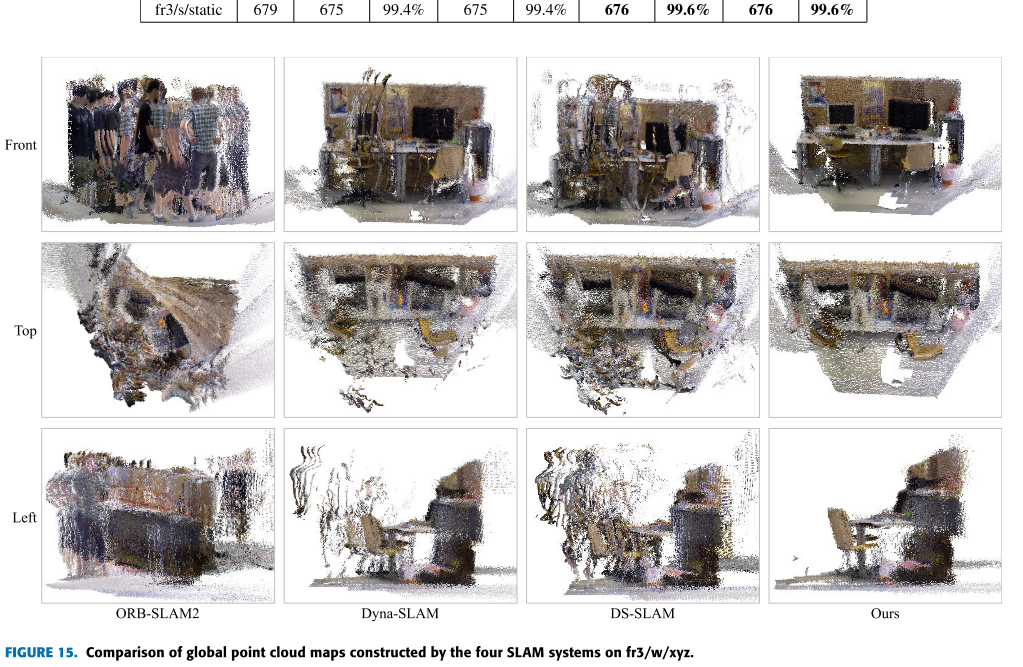

## Expereiments

# References

- Xiao, L., Wang, J., Qiu, X., Rong, Z., & Zou, X. (2019). Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robotics and Autonomous Systems, 117(April), 1–16. https://doi.org/10.1016/j.robot.2019.03.012

## 重点内容

**物体分割**: BlizNet

缺点是不能完美的分割物体,原因是可能物体太小或太远,或只出现了物体的一部分

**Dynamic Object Mask Extension**:

框中的部分是靠物体分割无法解决的部分,这部分会在PCD图上形成鬼影。

对于这部分区城通过分析 深度图像 来修补物体分割MASK的殘缺。

## Interaction Judgement between potential dynamic object and dynamic object

()

1) 检测区域是否相交, 确定候选的相互交互的物体 $\{P(i), O(j)\}$

2)进一步检测他们的深度分布是否有重合部分

## BOUNDING BOX TRACKING:

Blitz-Net 不能有效的检测较小,较远,或只出现物体一部分的目标物体。因此通过综合前后3帧的Bounding box来预测/平滑当前帧的Bounding box。 $K_t$ 是当前帧。

问题:未来的帧还没到,是如何利用的?

后面内容:

- GEOMETRIC SEGMENTATION OF DEPTH IMAGE

- FEATURE POINTS WITH STABLE DEPTH VALUES

去除没有深度的点,去除深度变化较大的点,保留深度值较稳定的点。

**LOCATION OF STATIC MATCHING POINTS**:

利用对极约束去除外点。

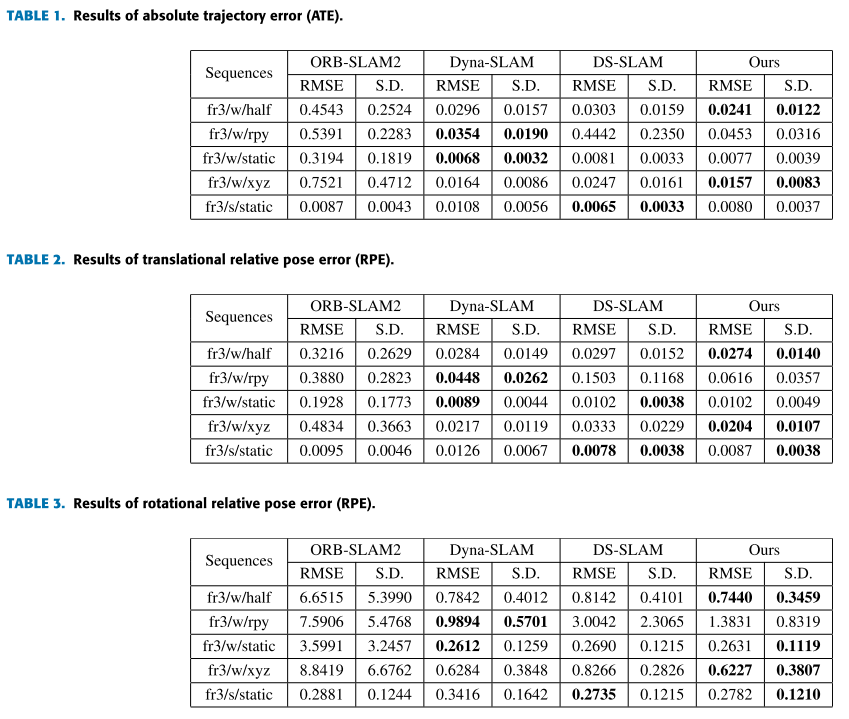

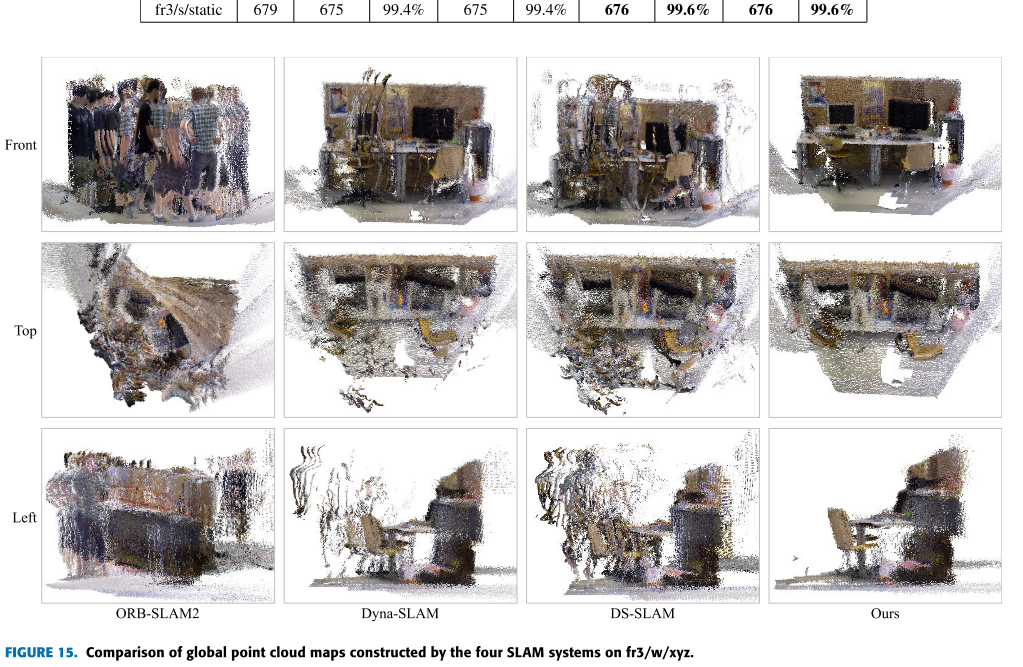

## Expereiments

# References

- Xiao, L., Wang, J., Qiu, X., Rong, Z., & Zou, X. (2019). Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robotics and Autonomous Systems, 117(April), 1–16. https://doi.org/10.1016/j.robot.2019.03.012

# 2020 Semantic SLAM With More Accurate Point Cloud Map in Dynamic Environments

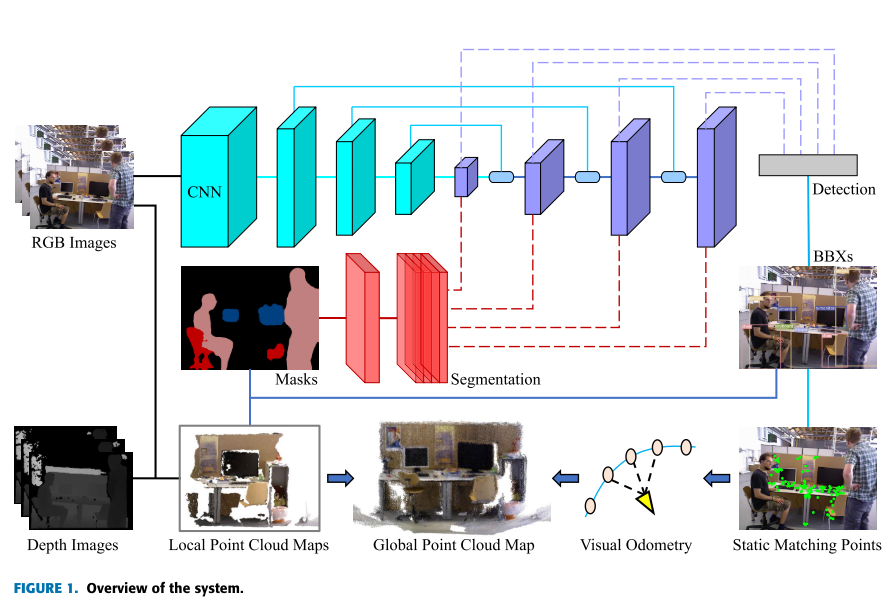

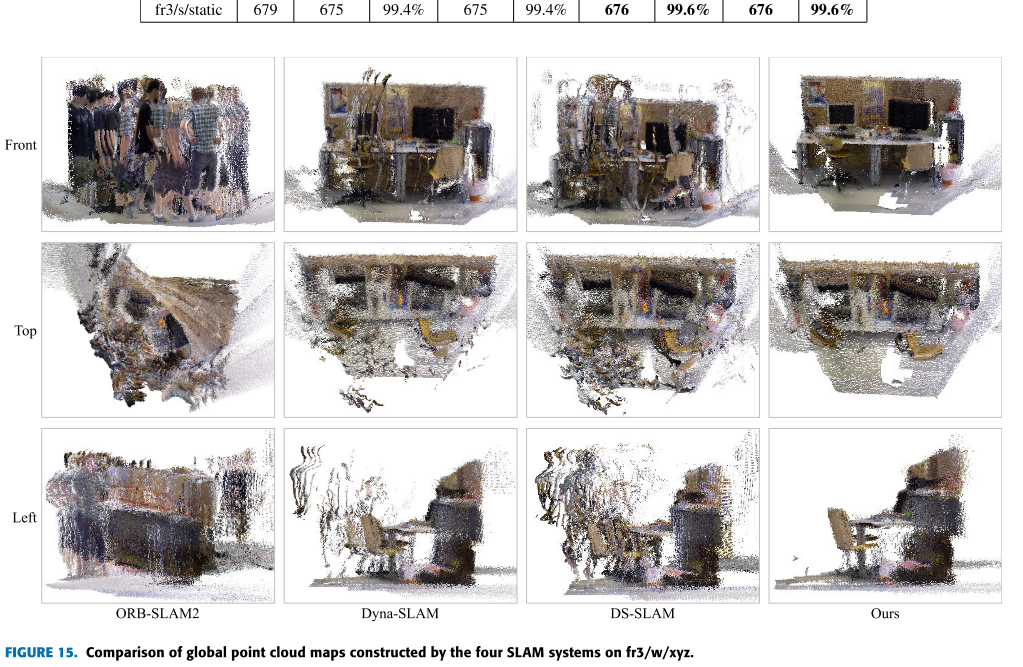

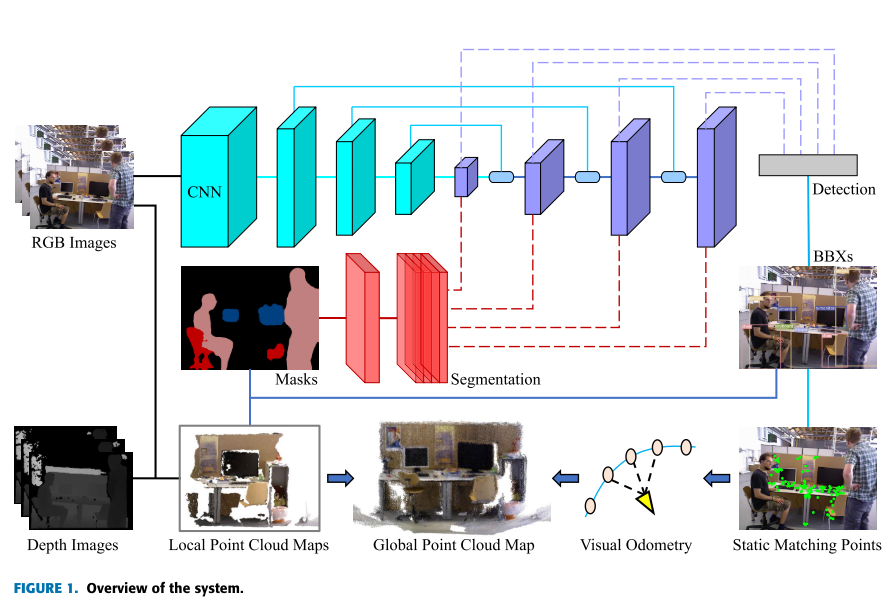

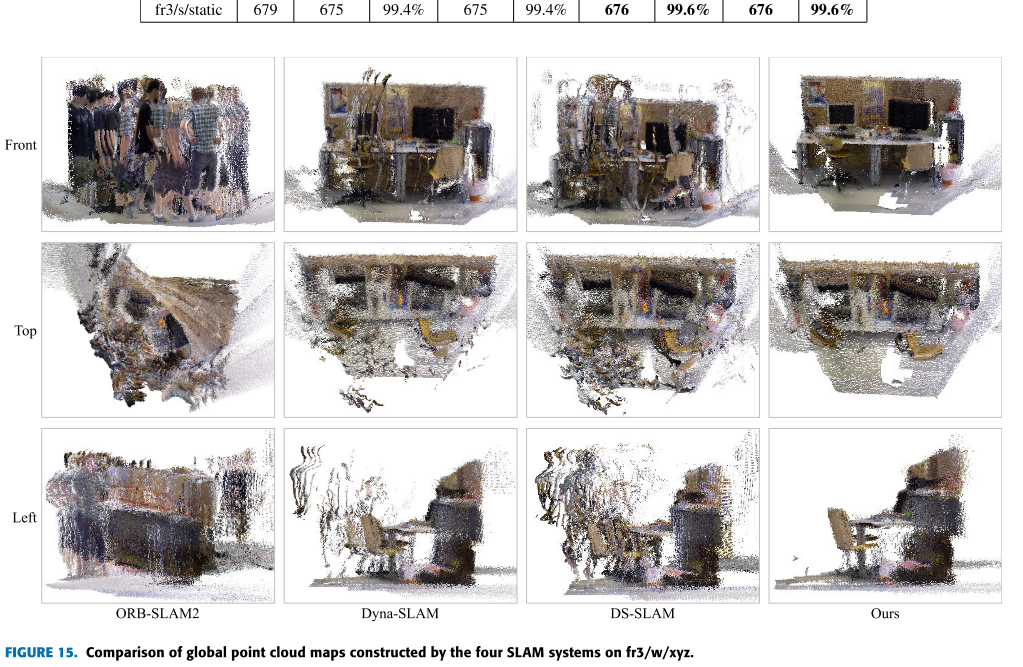

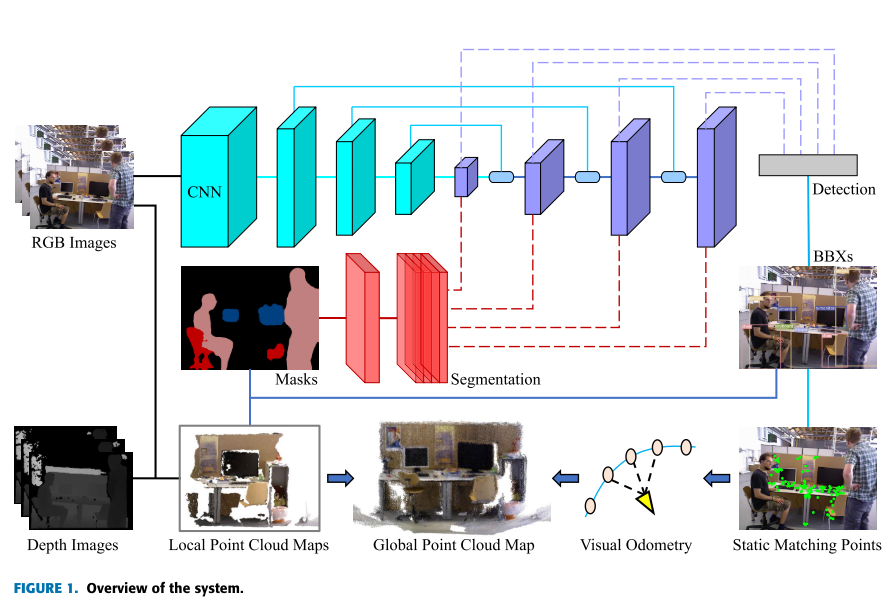

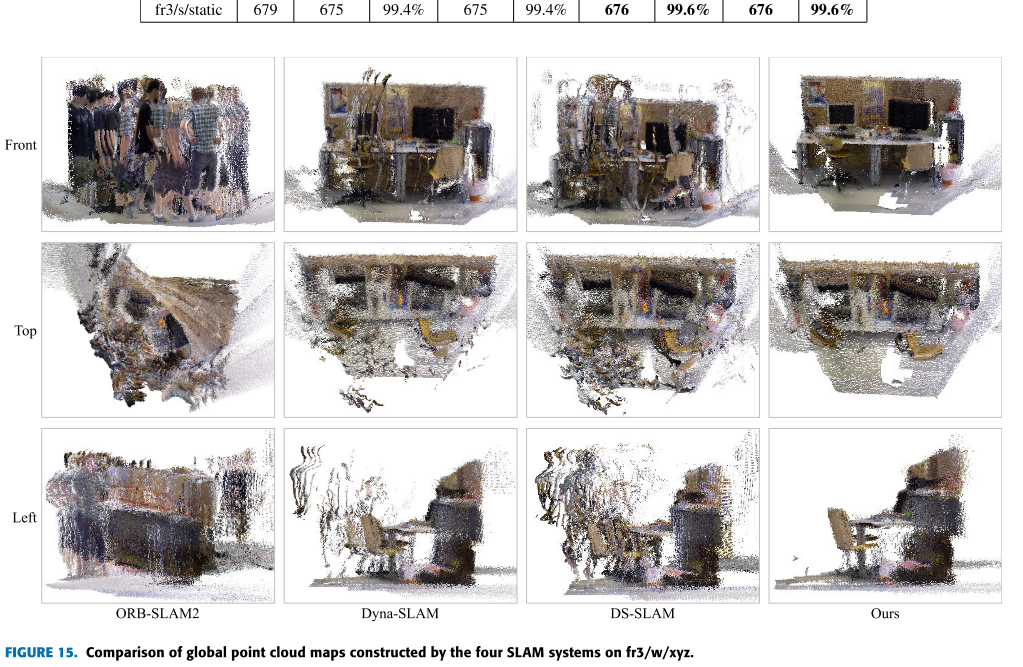

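This paper addresses the static-world assumption in visual SLAM. Its goal is to remove dynamic objects from the point cloud map as completely as possible.

The focus is not on improving tracking performance but on building a clean point cloud map.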

## Contributions

1) We extend the mask of the dynamic object to include more information about the object.

2) A bidirectional search strategy is proposed to track the bounding box of the dynamic object.

3) We integrate our approach with ORB-SLAM2 system. Evaluations and method comparisons are performed with the TUM RGB-D dataset. Our SLAM system can obtain clean and accurate global point cloud maps in both highly and lowly dynamic environments.

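## Key Points

**Object segmentation**: BlitzNet

Its weakness is that it cannot segment objects perfectly, typically because the object is too small, too far away, or only partially visible.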

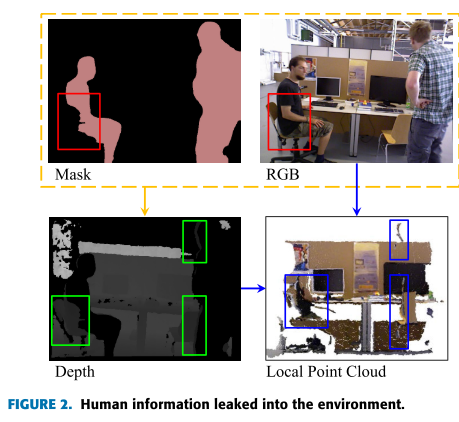

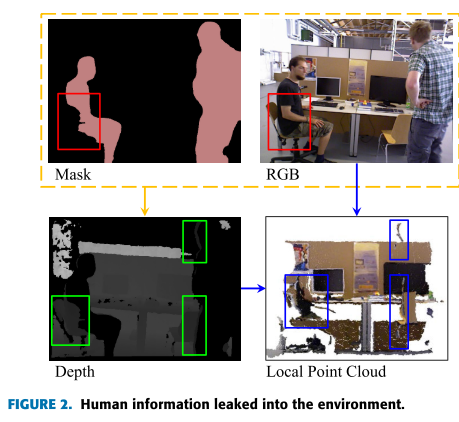

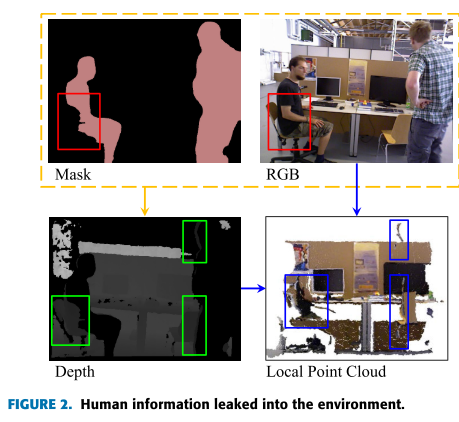

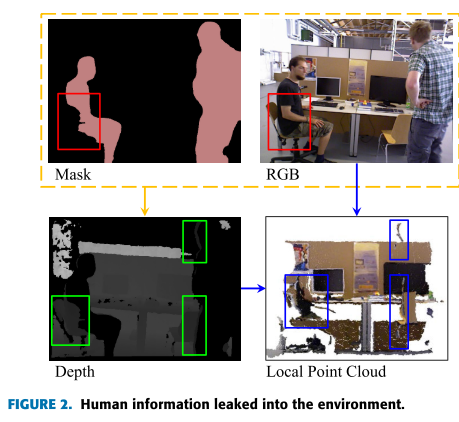

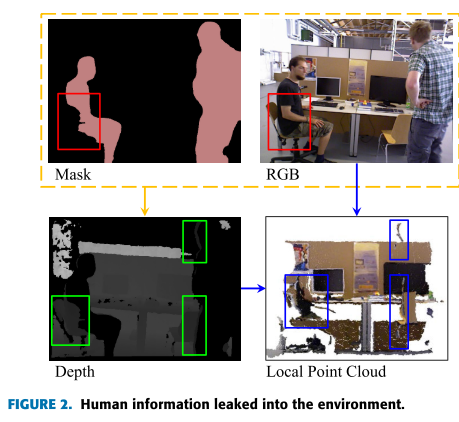

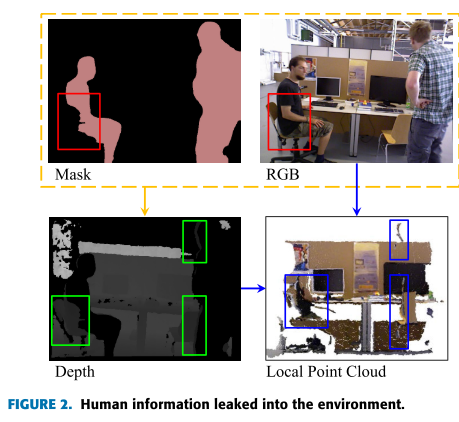

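**Dynamic Object Mask Extension**:

The boxed regions are the parts that object segmentation alone cannot handle; they leave ghosting artifacts in the point cloud (PCD) map.

For these regions, the depth image is analyzed to repair the gaps in the segmentation mask.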
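As a rough illustration of repairing a mask with depth, the sketch below grows a binary mask into neighbouring pixels whose depth is close to a pixel already in the mask. This is a generic region-growing heuristic, not the paper's exact procedure; the function name `extend_mask`, the 4-connectivity, and the threshold `tol` are all assumptions made for the example.

```python
from collections import deque


def extend_mask(mask, depth, tol=0.05):
    """Grow a binary mask into 4-connected neighbours with similar depth.

    mask  : list of rows of 0/1 (segmentation output)
    depth : list of rows of floats in metres, 0.0 meaning "no reading"
    tol   : hypothetical depth-similarity threshold in metres
    """
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]  # copy so the input mask is not modified
    queue = deque((r, c) for r in range(h) for c in range(w) if mask[r][c])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < h and 0 <= nc < w):
                continue
            # Skip pixels already in the mask or without a valid depth reading.
            if out[nr][nc] or depth[nr][nc] <= 0.0 or depth[r][c] <= 0.0:
                continue
            if abs(depth[nr][nc] - depth[r][c]) <= tol:
                out[nr][nc] = 1
                queue.append((nr, nc))
    return out


mask = [[0, 1, 0, 0]]
depth = [[1.0, 1.02, 1.04, 3.0]]
extended = extend_mask(mask, depth)
```

In this toy row, the mask spreads to the two pixels at about 1 m and stops at the 3 m background pixel. Because the comparison is made pixel to pixel, the mask can drift along a smooth depth ramp, so a real system would likely need an additional bound such as the object's bounding box.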

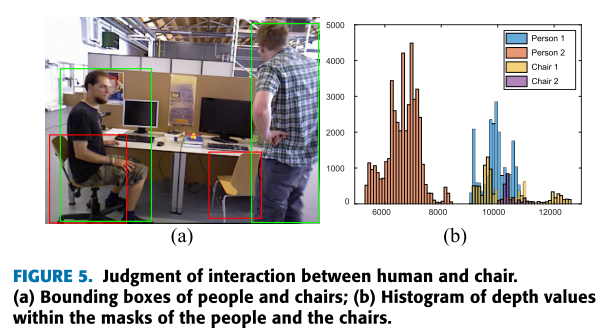

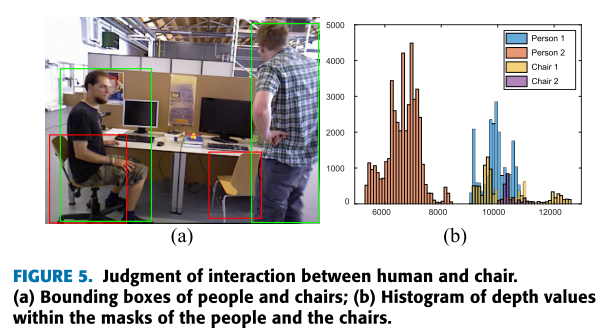

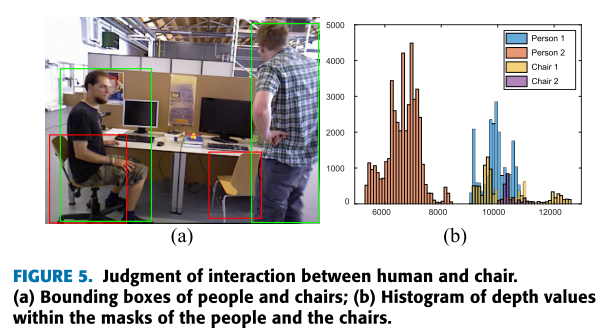

## Interaction Judgement between potential dynamic object and dynamic object

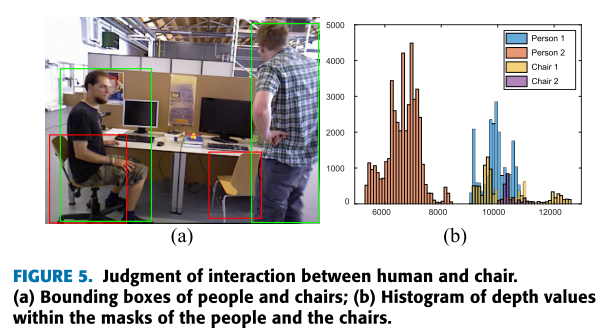

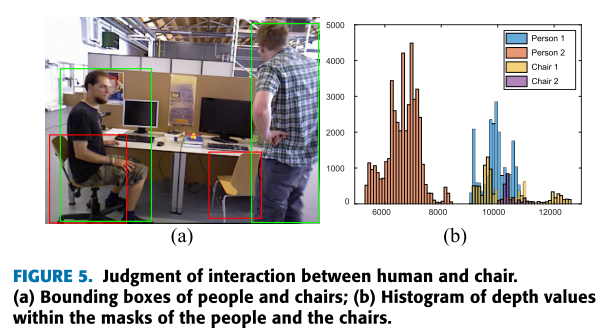

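1) Check whether the regions intersect to determine the candidate pairs of interacting objects $\{P(i), O(j)\}$.

2) Then check whether their depth distributions overlap.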
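The two checks above can be sketched as follows. This is a minimal sketch under stated assumptions: regions are represented by axis-aligned bounding boxes, and "depth distributions overlap" is approximated by comparing the [min, max] depth intervals of the two objects. The paper may use a finer criterion; all function names here are hypothetical.

```python
def boxes_intersect(a, b):
    """a, b are (x_min, y_min, x_max, y_max) boxes in pixels."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def depth_ranges_overlap(depths_p, depths_o):
    """Compare the [min, max] intervals of the valid (> 0) depth samples."""
    p = [d for d in depths_p if d > 0.0]
    o = [d for d in depths_o if d > 0.0]
    if not p or not o:
        return False  # no valid depth, so no evidence of interaction
    return min(p) <= max(o) and min(o) <= max(p)


def is_interacting(box_p, depths_p, box_o, depths_o):
    """Step 1: boxes intersect. Step 2: depth intervals overlap."""
    return boxes_intersect(box_p, box_o) and depth_ranges_overlap(depths_p, depths_o)


chair_box, chair_depths = (100, 100, 200, 300), [1.9, 2.0, 2.1]
person_box = (150, 80, 260, 320)
near = is_interacting(chair_box, chair_depths, person_box, [2.0, 2.2])
far = is_interacting(chair_box, chair_depths, person_box, [4.0, 4.5])
```

Here `near` is true because the person overlaps the chair both in the image and in depth, while `far` is false: the boxes intersect in the image, but the person stands well behind the chair.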

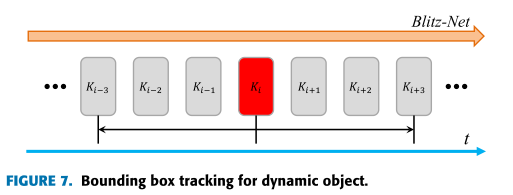

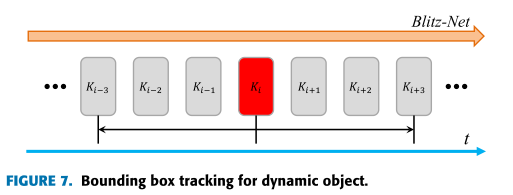

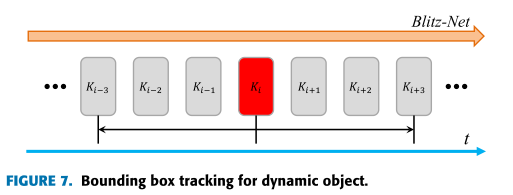

## Bounding Box Tracking

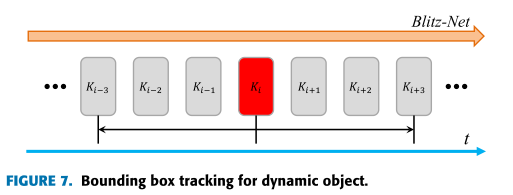

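BlitzNet cannot reliably detect objects that are small, far away, or only partially in view. The bounding box of the current frame is therefore predicted/smoothed by combining the bounding boxes of the 3 frames before and after it. $K_t$ denotes the current frame.

Question: the future frames have not arrived yet, so how can they be used?

Later sections: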
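One possible reading of the bounding box tracking step is that a missed detection is filled in from the nearest detections before and after the current frame. The sketch below shows that idea with simple linear interpolation. It assumes the neighbouring frames are already available (for example, when processing is offline or delayed by a few frames), which the notes do not confirm; `fill_missing_box` and `window` are hypothetical names.

```python
def fill_missing_box(boxes, t, window=3):
    """Estimate the box at frame t from nearby detections.

    boxes  : list indexed by frame; each entry is a
             (x_min, y_min, x_max, y_max) tuple or None for a missed detection
    window : how many frames to search backward and forward
    """
    if boxes[t] is not None:
        return boxes[t]
    # Backward search: nearest earlier frame with a detection.
    prev_i = next((i for i in range(t - 1, max(t - window, 0) - 1, -1)
                   if boxes[i] is not None), None)
    # Forward search: nearest later frame with a detection.
    next_i = next((i for i in range(t + 1, min(t + window, len(boxes) - 1) + 1)
                   if boxes[i] is not None), None)
    if prev_i is None and next_i is None:
        return None
    if prev_i is None:
        return boxes[next_i]
    if next_i is None:
        return boxes[prev_i]
    # Both sides found: interpolate linearly in time.
    w = (t - prev_i) / (next_i - prev_i)
    return tuple((1 - w) * p + w * n for p, n in zip(boxes[prev_i], boxes[next_i]))


detections = [(0, 0, 10, 10), None, (20, 20, 30, 30)]
estimated = fill_missing_box(detections, 1)
```

When only one side has a detection, the sketch simply copies that box, which is the crudest possible fallback; a motion model would be a natural refinement.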

- GEOMETRIC SEGMENTATION OF DEPTH IMAGE

- FEATURE POINTS WITH STABLE DEPTH VALUES

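Points without a depth value and points whose depth varies strongly are removed; points with relatively stable depth values are kept.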
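A minimal sketch of this filter is shown below. It assumes that "depth varies strongly" refers to variation within a small pixel neighbourhood around the feature point (as happens at object edges), and it treats a missing reading anywhere in that neighbourhood as unstable. The function name, `radius`, and `max_range` are hypothetical choices for illustration.

```python
def stable_depth_points(points, depth, radius=1, max_range=0.1):
    """Keep feature points whose local depth is valid and nearly constant.

    points    : iterable of (row, col) pixel coordinates
    depth     : list of rows of floats in metres, 0.0 meaning "no reading"
    radius    : half-size of the square neighbourhood (1 gives a 3x3 patch)
    max_range : largest allowed (max - min) depth inside the patch, in metres
    """
    h, w = len(depth), len(depth[0])
    kept = []
    for r, c in points:
        if depth[r][c] <= 0.0:
            continue  # no depth at the point itself
        patch = [depth[rr][cc]
                 for rr in range(max(r - radius, 0), min(r + radius, h - 1) + 1)
                 for cc in range(max(c - radius, 0), min(c + radius, w - 1) + 1)]
        if min(patch) <= 0.0:
            continue  # a hole in the neighbourhood counts as unstable
        if max(patch) - min(patch) <= max_range:
            kept.append((r, c))
    return kept


depth = [
    [1.0, 1.0, 1.0, 3.0],
    [1.0, 1.0, 1.0, 3.0],
    [1.0, 1.0, 0.0, 3.0],
]
candidates = [(0, 0), (1, 2), (2, 2), (0, 3)]
stable = stable_depth_points(candidates, depth)
```

Only `(0, 0)` survives: `(2, 2)` has no depth, `(1, 2)` sits next to a hole, and `(0, 3)` lies on a depth edge between 1 m and 3 m.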

**LOCATION OF STATIC MATCHING POINTS**:

Outliers are removed using the epipolar constraint.
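For reference, the epipolar constraint says that a static point $x_1$ in one frame and its match $x_2$ in the next satisfy $x_2^\top F x_1 = 0$, where $F$ is the fundamental matrix. In practice, one measures the distance from $x_2$ to the epipolar line $l = F x_1$ and rejects matches above a threshold. The sketch below assumes $F$ is already known and uses a hypothetical threshold of 1 pixel; the function names are illustrative.

```python
import math


def epipolar_distance(F, p1, p2):
    """Distance in pixels from p2 to the epipolar line F @ [p1, 1].

    F      : 3x3 fundamental matrix as nested lists
    p1, p2 : matched (x, y) pixel coordinates in frame 1 and frame 2
    """
    x1 = (p1[0], p1[1], 1.0)
    # Epipolar line a*x + b*y + c = 0 in the second image.
    a, b, c = (sum(F[i][j] * x1[j] for j in range(3)) for i in range(3))
    norm = math.hypot(a, b)
    if norm == 0.0:
        return math.inf  # degenerate line, treat as an outlier
    return abs(a * p2[0] + b * p2[1] + c) / norm


def static_matches(F, matches, threshold=1.0):
    """Keep matches that lie within `threshold` pixels of their epipolar line."""
    return [(p1, p2) for p1, p2 in matches
            if epipolar_distance(F, p1, p2) <= threshold]


# F for a pure sideways camera translation: epipolar lines are horizontal.
F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
matches = [((10, 20), (15, 20)), ((10, 20), (15, 26))]
inliers = static_matches(F, matches)
```

With this $F$, a static point must stay on the same image row. The first match does, so it is kept; the second moved 6 pixels vertically and is rejected as a likely dynamic point or mismatch.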

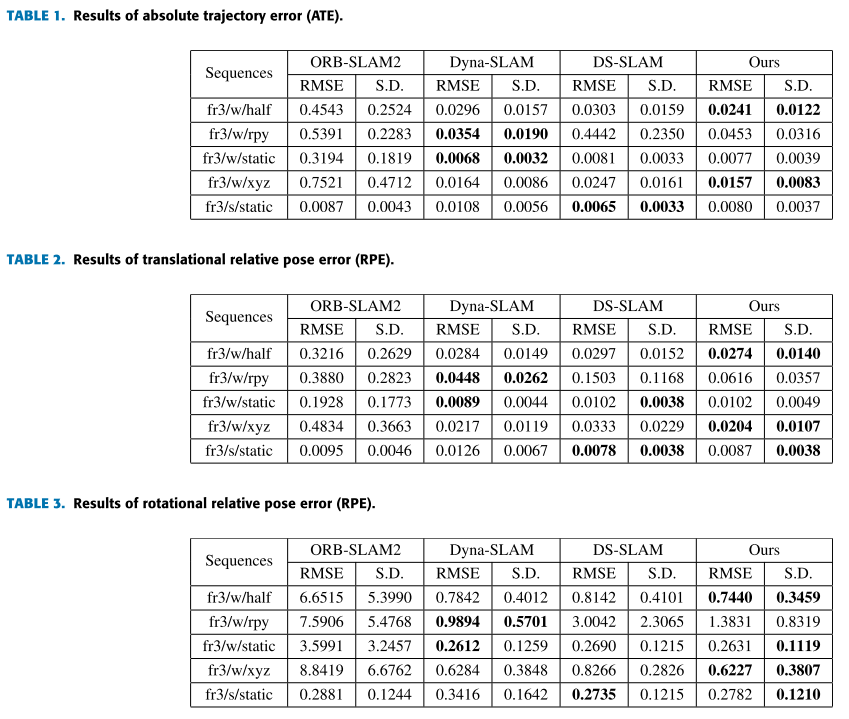

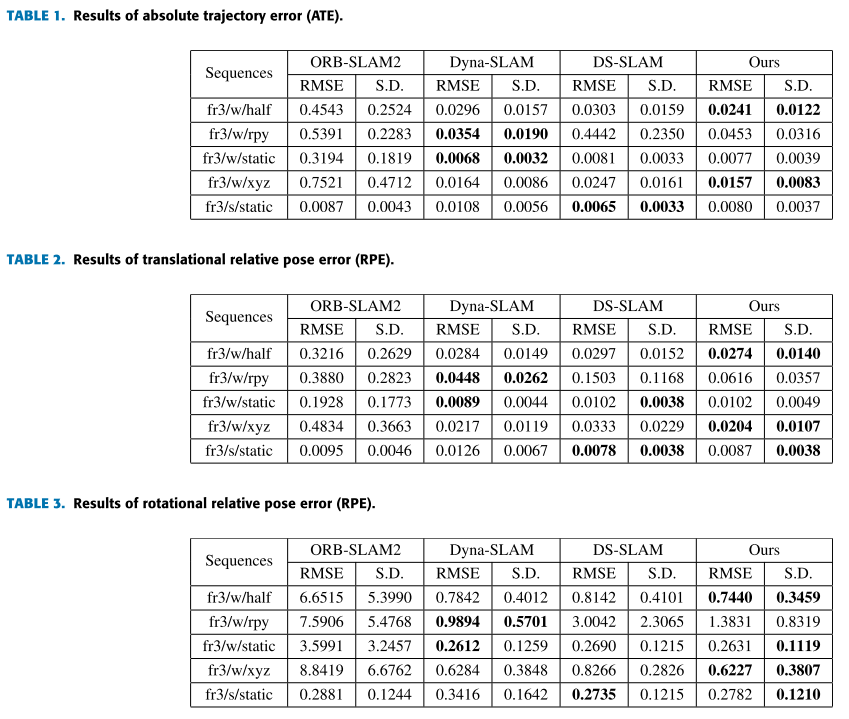

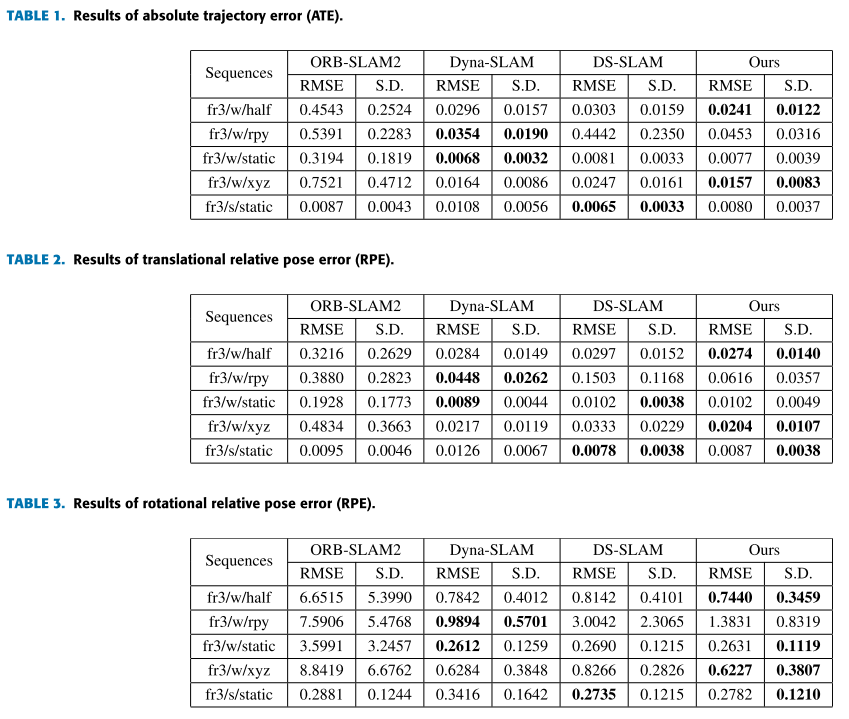

## Experiments

# References

- Xiao, L., Wang, J., Qiu, X., Rong, Z., & Zou, X. (2019). Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robotics and Autonomous Systems, 117(April), 1–16. https://doi.org/10.1016/j.robot.2019.03.012
